Our press release announcing a first-ever AI certification pilot marks a major milestone in RAII’s journey and its leadership in global responsible AI certification. Read on to learn all about it.

Consumers and businesses are far more likely to use, trust, and engage with technology if they know it is developed and used responsibly. As companies deploy AI systems for functions ranging from credit scoring to hiring, there is an increasing need for Responsible AI practices that can mitigate risks and promote safety, fairness, explainability, and other considerations and values. Yet implementation can be challenging without support or benchmarks.

The Responsible AI Institute (RAI Institute, or RAII) was founded three years ago to close the implementation gap in responsible AI adoption. RAII is led by Ashley Casovan, who previously led the development of Canada’s Directive on Automated Decision-Making. Our Executive Chairman, Manoj Saxena, previously served as the first General Manager of IBM Watson. Recently, Miriam Vogel, who serves on RAII’s Governing Board, was asked to chair the National AI Advisory Committee (NAIAC), which advises President Biden and the National AI Initiative Office on AI-related matters.

Drawing on its community, expert consultations, member engagements, working groups, and relationships with industry and academic researchers, RAII has developed an Implementation Framework that translates AI principles and frameworks from the Organisation for Economic Co-operation and Development (OECD), the United Nations Educational, Scientific and Cultural Organization (UNESCO), the National Institute of Standards and Technology (NIST), and others into practice. Building on that work, we are proud to announce the launch of our first-of-its-kind AI certification pilot with the Standards Council of Canada (SCC).

Why Certification Matters

The legal and regulatory landscape for AI is evolving rapidly. For example, the EU’s proposed AI Act uses a risk-based approach to determine compliance requirements for AI systems, including those used in HR. The AI Act relies on conformity assessments – both pre-market and on an ongoing basis – to support its legal framework.

RAII has developed a Certification Program and conformity assessment scheme based on its Implementation Framework and a harmonized review with the American National Standards Institute (ANSI) and the United Kingdom Accreditation Service (UKAS). The Certification Program is always grounded in a specific use case and aligns with the principles, laws, guidelines, research, and best practices relevant to the industry, function, and jurisdiction in which an AI system is developed and deployed.

Our Pilot with Standards Council of Canada

RAII will work with SCC to determine the requirements for developing a conformity assessment program for AI management systems. The pilot will draw on ISO’s AI Management System standard (ISO 42001), which is currently under development, to provide an AI Management System Accreditation Program in Canada. The results will help us finalize our AI certification requirements and promote AI systems that prioritize safety, fairness, and trustworthiness.

“As a result of this pilot, organizations will have clear guidance on how to establish, define and implement responsible AI practices in alignment with emerging regulations and standards, best-in-class research, and existing human rights-based laws and frameworks,” says Ashley Casovan, Executive Director of Responsible AI Institute.

Elias Rafoul, VP of Accreditation Services at SCC, says, “We are proud to be leading this first-of-its-kind pilot to understand what is required for AI management systems, and to evaluate needs for an end-to-end accreditation program for AI.”

How Our Certification Process Works

Our Certification Program operates at the use-case level and seeks to ensure compliance and alignment with relevant regulations and industry best practices in health, finance, labor, and other areas. Though our initial focus is on the US, UK, and Canada, we anticipate that the program will become available in other geographies, too.

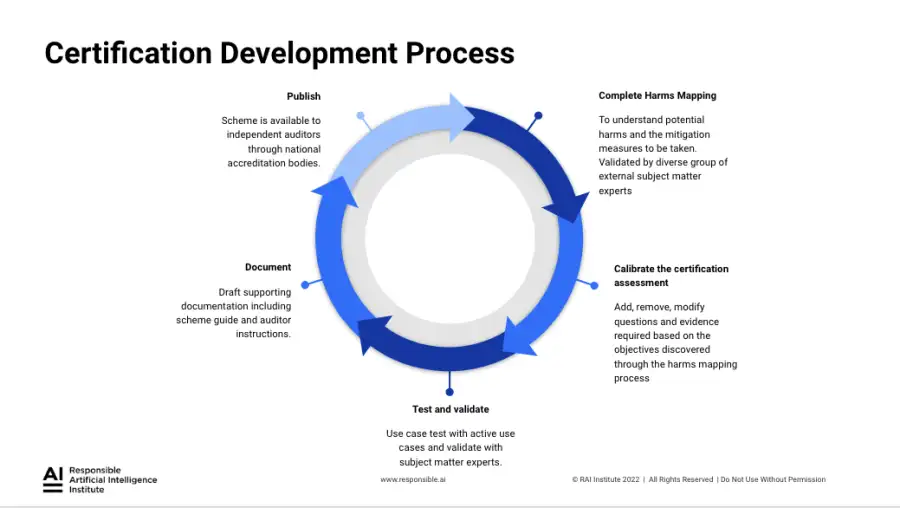

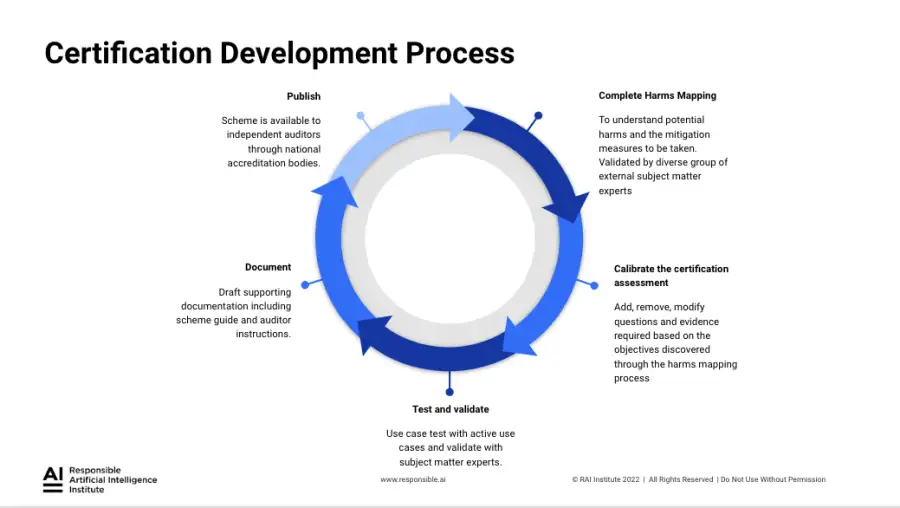

So far, we have focused on the development and procurement of AI systems for lending and collections, human resources, health diagnosis and treatment, and access to health care. As our work progresses, we will calibrate our Implementation Framework to additional use cases. Here’s our process for calibrating the Implementation Framework for any given use case:

- Identify Harms – RAII identifies issues and potential harms related to the use case.

- Configure Generic Assessment – RAII configures our baseline assessment based on the harms mapping; laws, guidelines, and standards; and input from working groups, research, and member engagements.

- Complete Certification Schema Inventory – RAII documents the calibrated assessment in a scheme guide and audit guide.

- Perform Audit – An accredited third-party auditor performs the audit.

- Provide Certification Results – The accredited auditor provides certification results to the client using the RAII certification assessment and automated reporting tools.

RAI Institute’s Leadership in this Space

As an independent, community-driven non-profit organization, RAII has been at the forefront of responsible AI efforts. Our approach is to focus on a specific use case and consult with industry, civil society, academia, standards bodies, policymakers, and regulators on how to refine and calibrate our Implementation Framework. The Implementation Framework includes the following dimensions:

- Bias and Fairness – AI systems should mitigate unwanted bias and drive inclusive growth to benefit people and the planet.

- System Operations – AI systems should use quality representative data appropriate to their domain while incorporating human support to ensure the system performance aligns with objectives.

- Consumer Protection – AI systems should respect data privacy and avoid using customer data beyond its intended use.

- Robustness – AI systems must function in a robust, secure, and safe way throughout their life cycles.

- Explainability and Interpretability – AI systems should be explainable to ensure that people understand AI-based outcomes and can challenge them.

- Accountability – Organizations developing or operating AI systems should be held accountable for their proper functioning.

What’s Next

After this huge step forward, we’ll continue to calibrate our assessment to real-world uses and the latest developments in the field.

We’re excited to have arrived here through our RAI Institute community’s collaboration, partnership, and support. Stay tuned for more details about the pilot as we join the CogX Festival during London Tech Week, June 13–17, 2022.