Over the last few months, domain experts and stakeholders have worked with the Responsible AI Institute (RAI Institute, or RAII) to explore how the RAI Institute Implementation Framework can be applied to an Artificial Intelligence (“AI”) application for the automated detection of skin disease. Following the development of guidelines for the use of AI in dermatological diagnosis and clinical dermatology (see here for further discussion), a working group formed to test those guidelines and the RAII Implementation Framework on a real use case.

Members of the group

Members of the group include domain experts from a variety of organizations with expertise in artificial intelligence research and development, clinical dermatology and data ethics. These organizations include:

- Memorial Sloan Kettering

- IBM Research

- Data Nutrition Project

- Partnership on AI

- Skinopathy

- Responsible AI Institute

Key shared objectives

The overall goal of the group is to help build AI “the right way” by identifying and documenting the risks that can arise from developing and deploying AI systems, in this case automated skin disease detection systems. We believe that adhering to agreed-upon standards and processes will improve both the path to responsible AI and the product itself, leading to fewer harms overall. The group is also testing the RAII assessment and certification program against the CLEAR Derm guidelines developed by the International Skin Imaging Collaboration (ISIC) AI working group, to ensure that the program is robust and consistent with relevant standards and guidelines. To do this, RAII is developing and testing its harms mapping with Skinopathy’s GetSkinHelp app, an AI application currently deployed to detect skin diseases in patients. The aim is to understand how effectively the combined assessment and checklists identify risks and provide adequate guardrails for AI systems.

Progress to date

We first established a framework of lifecycle phases, reflecting the lifecycle used to design and develop AI systems, within which risks and harms could be identified and documented. Organizing risks by phase of the AI lifecycle helps developers, deployers and other stakeholders identify and manage the risks associated with AI systems more effectively.

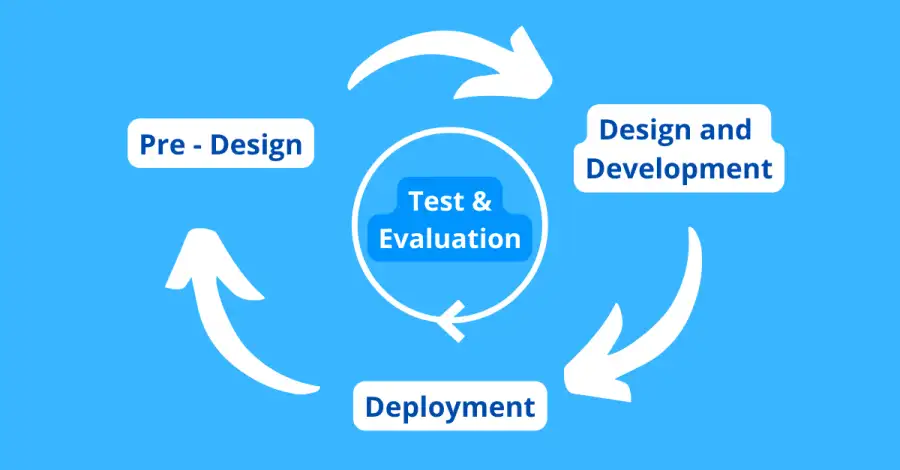

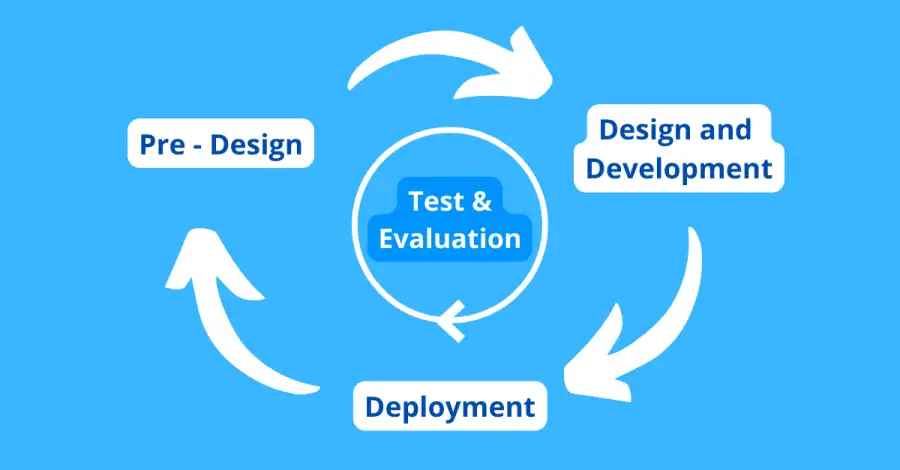

We adopted the AI lifecycle framework of the National Institute of Standards and Technology (“NIST”), as set out in NIST Special Publication 1270, ‘Towards a Standard for Identifying and Managing Bias in Artificial Intelligence’. It identifies three stages: Pre-Design, Design and Development, and Deployment, with continuous testing and evaluation throughout the entire lifecycle. Pre-Design covers the specification, collection and processing of the data that forms the basis of an AI model; Design and Development is when the model is designed, developed and trained; and Deployment is when the model is deployed in society and its performance is monitored and evaluated.

This lifecycle formed the framework for RAII’s harms mapping process and its accompanying guide. The process is organized into several categories: the risks associated with automated skin disease detection; examples of the downstream harm or impact on relevant stakeholders, such as the beneficiaries, subjects or users of the AI system (in this instance, patients and doctors); and mitigation strategies for eliminating or reducing each risk or harm. For instance, we identified that the underrepresentation of darker skin tones in existing clinical materials and datasets creates a risk of representational bias, with the downstream harm that patients with darker skin may receive inaccurate diagnostic results from automated AI models. To mitigate this risk, we suggest broadening data collection and sourcing to include a variety of demographic groups. To help stakeholders develop their own harms maps, we also created a guide that explains each category and encourages users to reflect carefully on the immediate and downstream issues, risks and harms that may arise from their AI application.
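To make the structure of a harms map concrete, the categories above (lifecycle stage, risk, downstream harm, affected stakeholders, mitigations) can be sketched as a small data model. This is a minimal, hypothetical illustration, not RAII’s actual template or tooling: the names `LifecycleStage`, `HarmsMapEntry`, `entries_for_stage` and `unmitigated_entries` are our own, and filing the skin-tone example under Pre-Design is an assumption based on that phase covering data collection.

```python
from dataclasses import dataclass, field
from enum import Enum


class LifecycleStage(Enum):
    """Stages of the NIST AI lifecycle used to organize the harms map."""
    PRE_DESIGN = "Pre-Design"
    DESIGN_AND_DEVELOPMENT = "Design and Development"
    DEPLOYMENT = "Deployment"


@dataclass
class HarmsMapEntry:
    """One row of a harms map (hypothetical schema, for illustration only)."""
    stage: LifecycleStage
    risk: str
    downstream_harm: str
    affected_stakeholders: list[str]
    mitigations: list[str] = field(default_factory=list)


def entries_for_stage(entries: list[HarmsMapEntry], stage: LifecycleStage) -> list[HarmsMapEntry]:
    """Return the entries recorded against a single lifecycle stage."""
    return [e for e in entries if e.stage is stage]


def unmitigated_entries(entries: list[HarmsMapEntry]) -> list[HarmsMapEntry]:
    """Return entries that do not yet list any mitigation strategy."""
    return [e for e in entries if not e.mitigations]


# Example entry based on the skin-tone representation risk in the text.
# Assigning it to PRE_DESIGN is an assumption (that phase covers data collection).
skin_tone_entry = HarmsMapEntry(
    stage=LifecycleStage.PRE_DESIGN,
    risk="Underrepresentation of darker skin tones in clinical materials and datasets (representational bias)",
    downstream_harm="Patients with darker skin receive inaccurate diagnostic results",
    affected_stakeholders=["patients", "doctors"],
    mitigations=["Broaden data collection and sourcing to include a variety of demographic groups"],
)

harms_map = [skin_tone_entry]
```

Keeping the lifecycle stage as an explicit field makes it easy to review risks phase by phase, and a check such as `unmitigated_entries` flags risks that have been recorded but not yet paired with a mitigation.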

Next steps

Once the risk and harms mapping process is complete, the working group will develop and test benchmarks and assessments that align with the CLEAR Derm guidelines and with the RAI Institute Implementation Framework. This iterative process will include validating the provisional benchmarks and assessments with subject matter experts, followed by further testing. Eventually, the group intends to share its findings and insights with researchers in industry and academia and with policymakers.