Authors: Lucia Gamboa and Evi Fuelle (Credo AI); Patrick McAndrew and Hadassah Drukarch (RAI Institute).

Standards play a crucial role in the development and adoption of new and emerging technologies. To paraphrase Zoolander, “standards are so hot right now!” In the field of AI, terms such as “trustworthiness,” “performance,” “robustness,” and “accuracy” take on new and important meanings that help businesses and consumers decide which AI models to trust. Policymakers are therefore necessarily looking to the standards ecosystem to guide AI actors on how to implement high-level principles and policies. Standards help establish common definitions for AI governance practices, such as risk management and human oversight of AI systems, making it easier and faster to satisfy AI regulatory requirements.

Various standards can serve as the basis for “trustworthy design,” providing objectives, terminology, and recommended actions to manage AI risks. Some of the most relevant AI standards-setting bodies include the International Organization for Standardization (ISO), the Standards Council of Canada (SCC), the Institute of Electrical and Electronics Engineers (IEEE), and the British Standards Institution (BSI Group).

A key distinction this blog explores is the difference between standards that provide organizational level guidance and those that include product level requirements or controls. This distinction will help organizations understand how standards development processes differ globally, and how existing standards (such as ISO/IEC 23894 Guidance on Risk Management, the ISO/IEC 42001 AI Management System standard, or IEEE’s 7000 series) differ from the EU AI Act’s forthcoming harmonized standards.

What are the different types of AI standards?

AI standards can be broadly categorized into organizational and product level standards. Each category serves distinct yet interconnected purposes in the governance and regulation of AI.

Organizational Level AI Standards

These standards focus on the overarching processes, policies, and practices that organizations implement to govern the development, deployment, and use of AI technologies. Organizational level standards may encompass a wide range of areas, including:

- Responsible AI Policies: Establishing policies and guidelines for AI development and deployment within an organization.

- Risk Management: Implementing risk assessment and mitigation strategies to address potential harms associated with AI systems.

- Transparency and Accountability: Ensuring transparency and accountability in AI decision-making processes, including data usage, algorithmic transparency, and explainability.

- Data Governance: Establishing data governance frameworks to manage data quality, privacy, security, and access throughout the AI lifecycle.

- Training and Education: Providing training and education for employees on responsible AI principles, responsible data practices, and AI-related regulations.

Organizational level AI standards are typically broad in scope and are designed to guide the overall AI governance framework within an organization, ensuring alignment with responsible AI considerations.

Product Level AI Standards

In contrast, product level AI standards focus specifically on the development, testing, and certification of individual AI systems. These standards may include requirements related to:

- Performance and Accuracy: Ensuring that AI systems meet specified performance metrics and accuracy thresholds for their intended use cases.

- Safety and Reliability: Implementing safety measures to prevent harm to users or the environment, as well as ensuring the reliability of AI systems under various conditions.

- Interoperability: Facilitating interoperability between different AI systems and compatibility with existing technologies and standards.

- Security and Privacy: Incorporating security measures to protect against unauthorized access, data breaches, and privacy violations.

AI product level standards are often more specific and technical in nature, focusing on the design and engineering of AI systems and their use cases to ensure safety, quality, and alignment with regulatory requirements.

How do standards development processes differ globally?

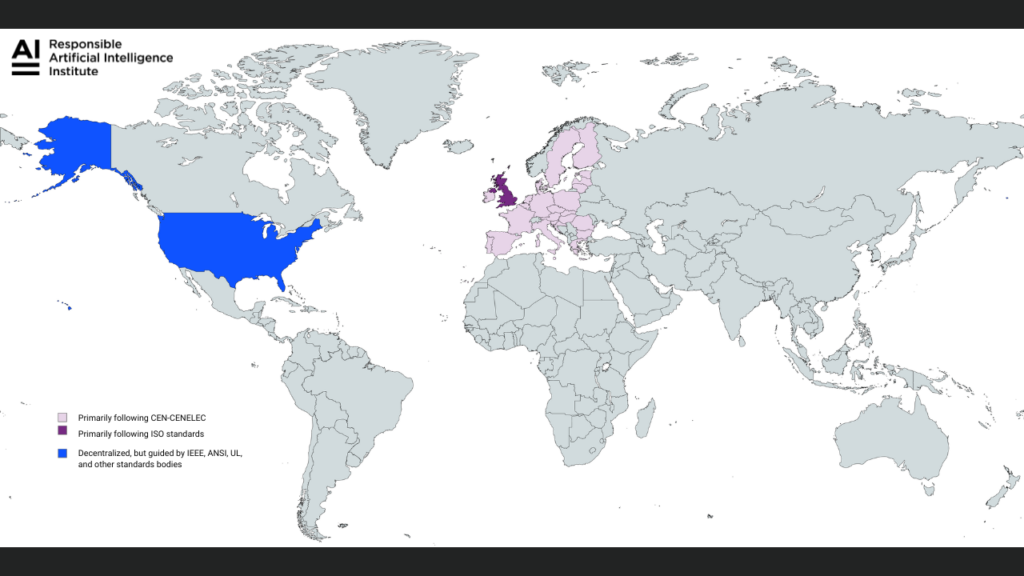

The United States (U.S.), European Union (EU), and United Kingdom (UK) have long adopted a standards-based approach for both product and organizational level standards and established mechanisms for cooperation to enable interoperability. For AI standards specifically, the UK has focused more on international standards developed by ISO through BSI Group while the EU continues its main body of work on standards through the European standardization organizations CEN and CENELEC (the European Committee for Standardization and the European Committee for Electrotechnical Standardization), as well as the European Telecommunications Standards Institute (ETSI).

Source: Responsible AI Institute

In the U.S., NIST (National Institute of Standards and Technology) is not strictly a standards-setting body, but it has strong ties with the standards development community. Alongside the American National Standards Institute (ANSI), it coordinates and convenes private and public sector stakeholders to develop frameworks and guidance that feed into international standards development.

While approaches may vary slightly, different standards-setting bodies share the objectives of facilitating safety, interoperability, and competition. They also share the common goal that standards should be useful and implementable. In the EU, alignment with so-called “harmonized” AI standards will serve as a mechanism to demonstrate conformity with the EU AI Act.

How do EU standards fit into the global standards ecosystem?

While there are already AI standards that can serve as a basis for trustworthy and safe AI development, the forthcoming EU AI Act harmonized standards are expected to be:

- product level oriented;

- more closely integrated with the AI product lifecycle;

- specific and tailored to the risk definition outlined in the AI Act; and,

- sufficiently prescriptive with clear requirements.

The EU AI Act was developed as a horizontal, product-oriented regulation, and its request for harmonized standards marks a distinctive approach in which adherence to standards becomes an element of conformity with the Regulation. Although EU harmonized standards are voluntary, developing standards aligned with mandatory legal requirements can help reduce uncertainty about definitions and interpretations. Historically, such ambiguities have required clarification through legal proceedings.

Ten harmonized standards are being developed by CEN-CENELEC in collaboration with the European Telecommunications Standards Institute (ETSI) as part of the EU AI Act standardization request. These include:

- Risk management for AI systems

- Governance and quality of datasets used to build AI systems

- Record keeping through logging capabilities by AI systems

- Transparency and information provisions for users of AI systems

- Human oversight of AI systems

- Accuracy specifications for AI systems

- Robustness specifications for AI systems

- Cybersecurity specifications for AI systems

- Quality management systems for providers of AI systems, including post-market monitoring processes

- Conformity assessment for AI systems

What is the timeline for forthcoming AI standards?

Both ISO and CEN-CENELEC are expected to deliver new AI standards within the next year and a half. The two have cooperation agreements and work closely through joint technical committees to keep their work aligned. CEN-CENELEC’s work will focus on producing standardization deliverables that underpin EU legislation, policies, principles, and values, while ISO is expected to publish two global standards: ISO/IEC 42006, which outlines audit requirements for ISO/IEC 42001, and ISO/IEC 42005 on AI system impact assessments.

In parallel, CEN-CENELEC is working to publish a first version of the EU AI Act harmonized standards, which must be finalized and ready for public comment by December 2024. By April 30, 2025, CEN-CENELEC is required to submit a Final Report to the European Commission, ensuring that EU standards and standardization deliverables conform with EU law on fundamental rights and data protection. Finally, by December 2025, EU AI Act standards need to be available for adoption so that enterprises can comply with the obligations that apply to them, which depend on whether they are developers or deployers of high-risk AI systems and on their use of general purpose AI.

Conclusion

Organizational level AI standards (such as ISO/IEC 42001) are typically broad in scope and are designed to guide the overall AI governance framework within an organization, ensuring alignment with regulatory requirements and responsible AI considerations. Product level AI standards, whose logic and structure are closer to standards such as ISO 14971 on risk management for medical devices, focus specifically on the development, testing, and certification of individual AI systems.

Several organizational components of EU AI Act compliance mirror those found in international standards such as IEEE 7000 and ISO/IEC 42001. These include establishing a risk management system, maintaining comprehensive technical documentation, and integrating human oversight. Despite these commonalities, additional standards are still needed to address areas such as risk measurement, testing and evaluation methodologies, information sharing throughout the AI lifecycle, and sector-specific applications.

Enterprises must adopt AI standards at both the organizational and product levels to manage AI risk comprehensively and to identify and mitigate specific risks effectively. Upcoming product level AI standards will help organizations comply with specific regulations, such as the EU AI Act. The evolving standards ecosystem highlights the need for organizations to stay informed about changing regulatory requirements so they can develop and deploy AI safely and securely across the enterprise.

About Credo AI

Credo AI is on a mission to empower organizations to responsibly build, adopt, procure, and use AI at scale. Credo AI’s pioneering AI Governance, Risk Management and Compliance platform helps organizations measure, monitor and manage AI risks, while ensuring compliance with emerging global regulations and standards, like the EU AI Act, NIST, and ISO. Credo AI keeps humans in control of AI, for better business and society. Founded in 2020, Credo AI has been recognized as one of Fast Company’s Most Innovative AI Companies of 2024, Fast Company’s Next Big Thing in Tech 2023, CB Insights AI 100, CB Insights Most Promising Startup, a Technology Pioneer by the World Economic Forum, and a top Intelligent App 40 by Madrona, Goldman Sachs, Microsoft and Pitchbook. To learn more, visit credo.ai or follow us on LinkedIn.

Credo AI Press Contact

Caty Bleyleben

CMAND

caty@cmand.co

About the Responsible AI Institute

Founded in 2016, Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. We accelerate and simplify responsible AI adoption by providing our members with AI conformity assessments, benchmarks and certifications that are closely aligned with global standards and emerging regulations.

Members include leading companies such as Amazon Web Services, Boston Consulting Group, KPMG, ATB Financial and many others dedicated to bringing responsible AI to all industry sectors.

Media Contact

Nicole McCaffrey

Head of Marketing, Responsible AI Institute

+1 (440) 785-3588
