How can organizations ensure that their AI systems are trustworthy?

Assurance services have become commonplace for financial operations, compliance, IT security, and organizational performance, and they are now emerging for AI systems. Certain components, such as AI risk assessments, are already required by regulations such as the EU AI Act. As AI agents, copilots, and other high-risk AI applications become more widely adopted by organizations, AI ethics, trustworthiness, and risks have also become top of mind for enterprise AI practitioners. But how can organizations ensure that they are deploying AI systems safely? And how can they ensure that these AI systems ultimately provide value for their stakeholders and customers? This piece specifically focuses on AI assurance within the scope of enterprises deploying AI systems across industries, rather than AI companies themselves.

Responsible AI (RAI) is the overarching umbrella that encompasses practices and objectives to implement ethics, trustworthiness, AI governance, and compliance. It includes AI assurance, a term that has recently emerged to refer to the specific products, services, and mechanisms that can assist in operationalizing RAI.

This blog explores the following topics:

- The current state of AI assurance technologies and mechanisms

- How to identify relevant AI assurance mechanisms

- How to assess vendors and developments in the AI assurance space

- The future of AI assurance and implications for AI practitioners

This piece demystifies the AI assurance space and explores how these mechanisms can help organizations ensure that their AI systems are trustworthy, robust, and compliant according to current regulations, requirements, and identified risks. It also examines current and emerging trends related to AI certifications, audits, and assessments, providing clarity in an otherwise fragmented and active landscape of research and vendors. This piece is relevant for organizations at all stages of their AI adoption and RAI implementation journey, offering legal, risk, compliance, and AI governance teams insights into how AI assurance can evolve and its implications for AI development within their organization.

What is AI Assurance?

AI assurance is gaining traction because, while many organizations are adopting RAI principles and commitments, there is a need to demonstrate adherence in practice. The main drivers of AI assurance include current and emerging AI-specific regulations; the increased harms, risks, and potential failures associated with generative and frontier AI systems; and a need for increased stakeholder trust and transparency. The UK Department for Science, Innovation and Technology, in its report on the growth of the AI assurance market in the UK, defines AI assurance as providing the tools necessary to measure the risks of AI systems, in order to ensure safety and meet regulatory and compliance requirements. This definition emphasizes tools and mechanisms that are specifically used to increase trust and transparency in AI systems, including risk assessments, algorithmic audits, certifications, and more.

AI assurance mechanisms address multiple levels of risk, including governance, organizational, system, and model levels. While these mechanisms do not provide a 100% guarantee that AI systems are free of risks and vulnerabilities, they offer comprehensive, end-to-end approaches to addressing compliance and regulatory requirements. They also identify opportunities for improvement in AI systems and organizational practices. In short, AI assurance allows organizations to better understand potential sources of AI risk and provides them with valuable information to increase their resilience and strengthen their AI risk management posture. While technical model evaluation is still an active and highly fragmented area of research, organizations can leverage insights on best practices to mitigate potential issues.

Levels of AI Assurance

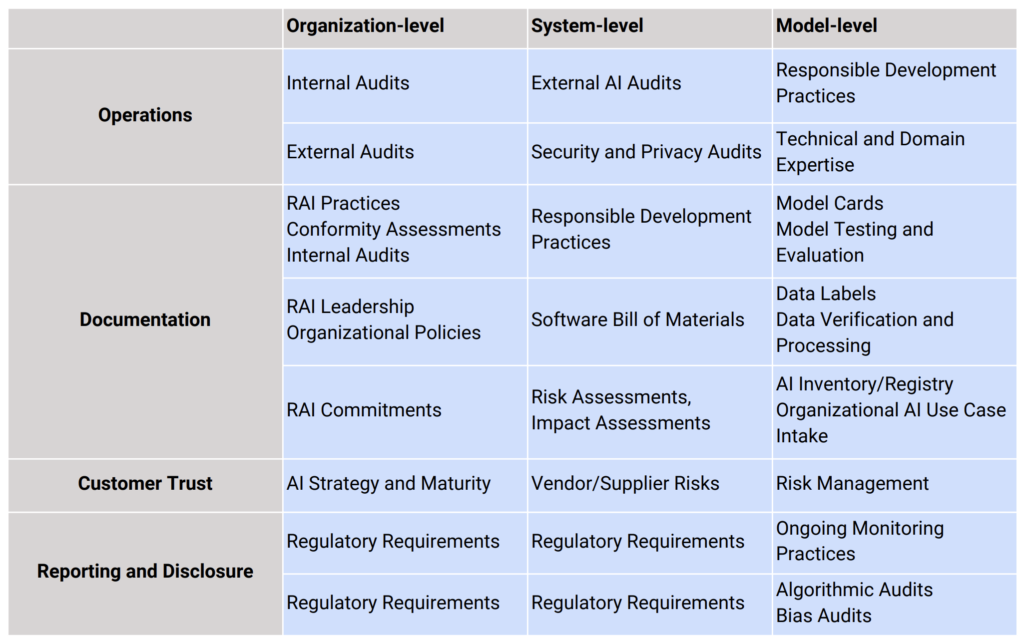

AI assurance can be categorized across several key dimensions, including operations, documentation and records, customer trust, and reporting and disclosure. Well-established mechanisms (below, in bold) include risk assessments, impact assessments, bias or algorithmic audits, and AI inventories/registries. Additionally, a variety of model and system-level practices have gained popularity in recent years, such as model cards, data labels, and other documentation methods for AI systems.
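To make the idea of an AI inventory or registry more concrete, the following is a minimal sketch of what a single record might track and how gaps in assurance coverage could be surfaced. The `InventoryEntry` fields, the risk labels, and the list of required mechanisms are all illustrative assumptions, not a format prescribed by any regulation or standard.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class InventoryEntry:
    """One record in a hypothetical AI inventory/registry."""
    system_name: str
    owner: str
    risk_level: str                      # illustrative labels, e.g. "high" or "limited"
    has_model_card: bool = False
    last_risk_assessment: Optional[date] = None
    completed_mechanisms: List[str] = field(default_factory=list)


def missing_mechanisms(entry: InventoryEntry, required: List[str]) -> List[str]:
    """Return the required assurance mechanisms not yet recorded for this entry."""
    return [m for m in required if m not in entry.completed_mechanisms]


# Hypothetical system and requirements, for illustration only.
entry = InventoryEntry(
    system_name="resume-screening-assistant",
    owner="talent-engineering",
    risk_level="high",
    has_model_card=True,
    completed_mechanisms=["risk assessment"],
)
required = ["risk assessment", "impact assessment", "bias audit"]
print(missing_mechanisms(entry, required))
```

In practice, an inventory like this would typically live in a governance tool or database rather than in code; the sketch only shows the kind of information such a record might hold.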

Common approaches to AI assurance involve assessing individual systems, models, algorithms, or overall organizational practices against specific standards such as the EU AI Act or ISO 42001. Different methods require different levels of access to information about the organization, its AI systems, and its models. In-depth algorithmic audits, for example, require access to data and to system development and deployment artifacts, as well as detailed documentation from engineering teams.

There are also well-established assurance mechanisms that are not specific to AI systems, but rather serve to complement AI assurance efforts, such as security and privacy audits, which provide the foundational layer for AI system security audits. Emerging mechanisms include conformity and regulatory assessments that evaluate organizational and system-level practices against various standards and AI regulations. Other approaches focus on specific risks, such as security risks, ethical considerations, personnel risks, or broader impacts, such as to certain communities, the environment, or other external stakeholders.

While technical model evaluations have gained traction in the research community, it is equally essential to address the entire AI system life cycle and the surrounding organizational activities. This is particularly important given the current limitations of technical evaluations and the broader regulatory requirements. Significant gaps remain in assessing organizations’ RAI practices and development processes across the different levels depicted above. Additionally, AI assurance necessitates evaluating all components and vendors involved in the development process and supply chain to address regulatory concerns, potential risks, and emergent capabilities in these systems.

The current regulatory landscape has been a natural driver of RAI and AI assurance activities. The EU AI Act has set forth important requirements, especially for high-risk systems, with a General Purpose AI Code of Practice currently under development. The Responsible AI Institute (RAI Institute) has contributed to these consultations, and harmonized standards are expected to take off in two to three years. In the United States, various laws have been enacted or are in progress at the state level, addressing specific AI systems and harms (e.g., the Colorado AI Act and the new Utah Artificial Intelligence Policy Act).

In addition to formal regulations, organizations and government agencies have developed general frameworks and guidelines for AI systems. However, AI assurance mechanisms provide specific approaches that organizations can adopt for their needs. Finally, enterprises must also consider domain-specific regulations before delivering AI services or using AI products in any capacity. Examples include New York’s AI in Hiring Law and California’s AI Transparency Act. With substantial penalties for non-compliance, the global diversity of AI regulations, coupled with industry-specific requirements and pending legislation, poses a complex challenge for organizations striving to meet these obligations.

The potential for AI failure and harm, especially with generative AI systems that process unstructured, multimodal information and can produce unpredictable outputs, has added complexity to enterprise AI initiatives. Such failures can severely impact individuals’ health, safety, and rights. They can also affect critical infrastructure, where interconnected software and physical infrastructure may trigger widespread cascading effects. Finally, AI failures can disrupt sensitive business operations, affecting product and service delivery, development, and day-to-day activities. These disruptions can impact financial performance, operational security, confidential data, and critical business activities.

Stakeholder trust and transparency are important drivers of emerging AI assurance mechanisms, as perception of non-compliance can affect reputation and brand image, business and stakeholder relationships, future business growth, and customer trust. 84% of RAI experts surveyed by MIT Sloan, for example, were in favor of mandatory disclosures regarding enterprise use of AI for customers in order to maintain stakeholder trust.

AI assurance mechanisms, such as audits and assessments, can help organizations improve their AI systems, including model reliability, accuracy, and performance. Given that very few AI models actually make it to production due to issues detected at various stages of the AI development life cycle, these mechanisms enable organizations to maximize the benefits of AI, rather than merely following the technology hype without realizing the full potential of frontier systems.

A Holistic Evaluation of RAI Practices

What makes AI audits different from typical assurance services or security audits? The auditing and assurance industry is undergoing dramatic transformation, with AI tools reshaping the audit process. However, when it comes to auditing AI systems themselves, fully technical and automated approaches are insufficient because requirements are often ambiguous and involve subjective interpretation. While methods such as using LLMs for AI system auditing have been explored, these systems also require careful evaluation due to risks including lack of value and goal alignment, accountability, impartiality, and privacy/confidentiality risks. As a result, holistic third-party reviews and the integration of various stakeholder perspectives are essential to providing detailed insights for practitioners in light of broad requirements for AI systems.

Continuous audits and assessments are essential, moving beyond post-hoc checklists completed by developers. Effective approaches use a combination of mechanisms to address all of the layers of AI assurance previously depicted, especially for high-risk systems. Studies have affirmed that users favor third-party verification and assurance labels for high-risk AI systems to address stakeholder concerns. Achieving this requires in-depth technical analysis and evaluation, multi-stakeholder collaboration, and interdisciplinary expertise to anticipate potential harms and mitigations effectively.

Traditionally, developers and engineering teams address legal, compliance, and risk-related requirements at the end of system development, often as a questionnaire at the end of the process. However, this reactive approach is increasingly inadequate for AI systems, given their unique risks. Instead, AI systems require proactive assurance mechanisms integrated before implementation. Additionally, traditional assurance processes, which provide a snapshot of compliance at a single point in time, also fall short for AI systems that evolve and adapt. These static approaches fail to inspire long-term stakeholder trust. Continuous re-assessment and assurance practices are needed to evaluate system effectiveness and enforce internal policies in real time.
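As a rough illustration of the difference between a point-in-time snapshot and continuous re-assessment, the sketch below flags a system for review either when it has changed since its last assessment or when a review interval has elapsed. The function name, the risk labels, and the 90-day and 365-day intervals are hypothetical choices made for this example; they are not drawn from any regulation or standard.

```python
from datetime import date, timedelta

# Hypothetical re-assessment intervals per risk level (assumed for illustration).
REASSESS_EVERY = {
    "high": timedelta(days=90),
    "limited": timedelta(days=365),
}


def needs_reassessment(risk_level, last_assessed, today, changed_since_assessment=False):
    """Return True if the system changed since its last assessment
    or if the interval for its risk level has elapsed."""
    if changed_since_assessment:
        return True
    return today - last_assessed >= REASSESS_EVERY[risk_level]


today = date(2025, 6, 1)

# High-risk system last assessed 137 days ago: the 90-day interval has elapsed.
print(needs_reassessment("high", date(2025, 1, 15), today))

# Limited-risk system assessed on the same date: still within its 365-day interval.
print(needs_reassessment("limited", date(2025, 1, 15), today))

# Any system that changed (e.g. retrained model, new data source) is flagged immediately.
print(needs_reassessment("limited", date(2025, 5, 1), today, changed_since_assessment=True))
```

The point of the change-triggered branch is that a calendar schedule alone still behaves like a series of snapshots; tying re-assessment to system changes is one simple way to move closer to continuous assurance.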

Ultimately, organizations will need to combine internal procedures with external, third-party verification of their systems and processes. A comprehensive approach includes (1) internal processes for compliance with AI regulations, (2) external audits for reporting and third-party attestation of RAI practices, as well as (3) tertiary assurance mechanisms for assessing stakeholder impacts.

While the lack of a single global standard for RAI development and assurance creates a fragmented ecosystem, waiting for a “gold standard” regulation is not an option. Given the unpredictability and complexity of frontier AI systems, addressing multiple levels of AI governance, both at the organizational and system levels, can help organizations remain prepared for future challenges and potential system risks.

What about AI Certifications?

Enterprises face a complex landscape of technology-related certifications, and AI-specific certifications are becoming increasingly important in AI assurance. In theory, certifications offer a formal approach to AI audits, providing a stamp of approval for organizations and vendors. However, the unique nature of generative and frontier AI systems makes certifications significantly more complex. Without a global RAI standard in place, certifications must either align with specific frameworks or focus on particular goals. Harmonizing these diverse regulations and frameworks is difficult, as they cover anything from high-level organizational practices to detailed technical system requirements. Yet assessing all of these components is essential to establish RAI best practices and meet evolving regulatory demands.

While organizations might compare AI certifications to security certifications such as SOC 2, the scopes of the two differ significantly. Security attestations look at a narrower set of themes and controls than assurance for AI systems does. AI assurance, on the other hand, includes security and data privacy controls specific to AI systems, along with many other themes specific to different stages of the system development, procurement, and deployment process. AI systems demand forward-thinking and dynamic assessments to address current and future risks of new model capabilities and use cases, such as unpredictable model performance and capabilities, novel risk categories, new modalities (text, image, audio, video), as well as new use cases (such as interconnected AI and physical infrastructure, chemicals, biological domains, and other potentially high-risk areas).

Existing certifications and frameworks such as ISO 27001, SOC 2, Safety by Design, and Privacy by Design serve as foundations for IT and compliance practices. However, AI assurance mechanisms go further by addressing:

- Stakeholders: Traditionally, security and IT compliance audits are owned by specific functions including Internal Audit, GRC, and IT, while AI audits involve diverse cross-functional stakeholders who are involved in different aspects of organizational strategy and implementation. These include legal, procurement, risk management, RAI, AI governance, engineering, and domain-specific product and business teams. In organizations with lower levels of AI maturity, many of these key stakeholder positions have not yet been created.

- Scope: Existing security and data privacy audits serve as the foundation for IT and compliance best practices. AI assurance mechanisms, on the other hand, allow organizations to further build upon this base to address unique considerations for AI systems and RAI practices across organization resources, processes, and governance structures.

- Applicability for AI systems and practices: AI assurance audits and mechanisms add another layer that addresses AI-specific concerns and emerging requirements. This helps companies comply with new regulations and standards, from the EU AI Act to ISO standards for AI management systems. This goes beyond standards developed by industry bodies, which are primarily focused on deployment concerns. Additionally, requirements for AI systems will change depending on the risk level, jurisdiction, and sector, as well as the company’s role(s), such as AI developer, provider, user, customer, and/or partner. Existing audits do not cover these aspects, resulting in a need for AI-specific assessments. Finally, given the often opaque nature of AI capabilities and systems, greater access to specific models, data, and development systems is necessary to accurately assess risks.

There are potential trade-offs when choosing AI certifications compared to adopting a mix of assurance methods tailored to an organization’s needs. One key consideration is the robustness and flexibility of the certification. Schemes must cover an extensive range of controls while remaining adaptable to new technological and regulatory developments. However, achieving external accreditation for these schemes — while enhancing credibility — can be time-consuming and hinder their agility in evolving with emerging requirements. AI certifications must also strike a balance between comprehensiveness and administrative burden. Excessive administrative requirements during the AI assurance process could deter organizations from addressing issues and mitigating risks, which is a critical factor for processes like AI reporting, auditing, and system/vendor evaluation. Holistic concerns related to AI systems often conflict with the granular focus of certification schemes, which are designed for highly specific outcomes. This tension is compounded by the dynamic nature of AI systems, where technical capabilities continuously evolve, and various entities—products, processes, individuals, and organizations—require evaluation. Narrowly focused certifications risk overlooking significant and unique risks or harms associated with AI systems.

While some controls across audit types and categories may overlap — such as those in standard security audits and AI-specific security requirements (e.g., the EU Cybersecurity Act) — this overlap is inevitable given the far-reaching impacts of AI systems. Holistic assessments are essential, as ethical AI development spans multiple domains. Focusing solely on one area risks providing organizations with a false sense of security while potentially exacerbating other issues. Key areas include supply chain and procurement, environmental considerations, and health, safety, and rights concerns. Research on AI auditors highlights the need for a multidisciplinary approach to AI auditing, incorporating expertise from technical, legal, ethical, socio-cultural, and other domains. This broader perspective distinguishes AI assurance practices from standard IT and security compliance audits, ensuring they address the unique and complex challenges of AI systems.

Much of the research underpinning technical and system-level evaluations for AI is still active and rapidly evolving. Areas such as AI safety, explainability, interpretability, adversarial attacks and defenses, and trustworthy machine learning are continually advancing with new methods, challenges, and tools. As a result, AI certifications and assurance mechanisms must remain flexible and adaptable to align with the latest developments. Additionally, the complexities and interdependencies of AI supply chains — encompassing development practices, data procurement and collection, models, tooling, and more — make transparency and accountability at all levels essential. To address the various levels where RAI practices are critical, organizations will likely rely on a combination of assurance mechanisms rather than stand-alone certifications. This approach ensures comprehensive oversight and adaptability in the face of emerging challenges.

Where Should Organizations Go From Here?

In the evolving AI landscape, the question of what exactly is being evaluated is multilayered, yet essential given the wide-ranging impact and risks posed by AI systems. Effective AI assurance mechanisms should fulfill the basic needs of organizations adopting AI solutions and provide a certain level of confidence in their RAI practices across organizational and system levels. These needs include stakeholder trust; AI system reliability, accuracy, and trustworthiness; AI security; regulatory compliance; and overall business goals.

AI assurance must be grounded in robust RAI practices. The absence of global standards has led many enterprises to adopt ad-hoc and inconsistent approaches. Decision frameworks for undergoing AI audits and assurance processes should also account for use case and sector-specific nuances, which are often overlooked in existing frameworks. While AI assurance is relevant for all organizations at any stage of their AI adoption journey, specifying these needs and priorities is critical for selecting the most effective mechanisms. Building a solid RAI foundation can help organizations understand their AI adoption and RAI maturity levels, and consequently identify areas where specific AI assurance mechanisms are necessary.

Supercharging Third-Party AI Assurance

RAI Institute works with organizations across sectors to support their RAI journey and AI adoption. As part of this work, we create comprehensive standards and assessments for AI assurance implementation. In 2025, we are bringing together stakeholders for AI-specific procurement and supplier collaboration grounded in our previously published guidance on trustworthy AI procurement.

If you are an AI vendor providing an AI platform, and are interested in participating in our upcoming initiative regarding AI vendor and supplier assessments, reach out to us through this form.

About the Responsible AI Institute

Founded in 2016, Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. We accelerate and simplify responsible AI adoption by providing our members with AI assessments, benchmarks and certifications that are closely aligned with global standards and emerging regulations.

Members include leading companies such as Amazon Web Services, Boston Consulting Group, Genpact, KPMG, Kennedys, Ally, ATB Financial and many others dedicated to bringing responsible AI to all industry sectors.
