By Steve Mills, Ashley Casovan, Var Shankar

Introduction

Given the rapid advancement and adoption of AI systems to deliver value across industries, there have been increasing calls from organizations, workers, and governments to provide necessary oversight for these technologies. As organizations continue to leverage AI to augment research, service delivery, marketing, and operations activities, incidents of AI behaving poorly continue to be identified.

AI systems are raising concerns related to bias, data ownership, privacy, accuracy, and cybersecurity. These concerns present serious risks, with impacts ranging from discrimination to loss of consumer trust to material and physical harm. Establishing and implementing AI Governance can mitigate these potential harms, allowing organizations to realize the full potential of AI.

Still an emerging area of practice, AI Governance incorporates instruments ranging from laws to voluntary organizational practices. AI Governance efforts are becoming foundational to the safe, secure, and responsible development and deployment of AI systems. Recent analysis indicates that responsibility for AI Governance should ultimately sit with CEOs, since AI Governance includes “issues related to customers’ trust in the company’s use of the technology, experimentation with AI within the organization, stakeholder interest, and regulatory risks.”

AI Governance is a young but rapidly evolving field that’s being shaped by lawmakers, civil society organizations, legal experts, and industry leaders. Because it can be difficult to know where to start when establishing an AI Governance practice, this paper provides a high-level guide to the various governance mechanisms to help business leaders navigate this complex landscape.

Navigating AI Governance

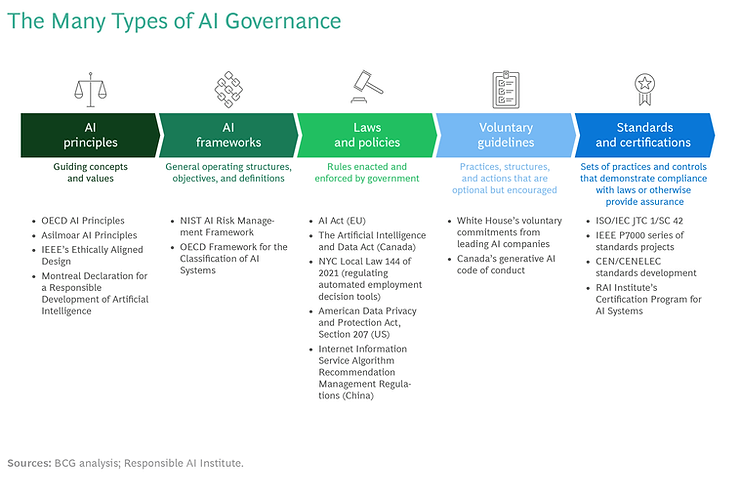

When establishing an AI Governance practice, it’s important to understand the scope and purpose of each AI Governance instrument. The figure below summarizes the different types of AI governance mechanisms, which are detailed further in the sections that follow.

AI Principles

From 2016 to 2019, governments and international organizations, such as the Organisation for Economic Co-operation and Development (OECD), identified key issues related to the increased deployment of AI systems. Recognizing that AI is a socio-technology and therefore carries ethical risks in addition to traditional risks, governments and international organizations undertook multi-stakeholder processes to develop principles to guide AI development and use.

Foundational work such as the OECD’s AI Principles, the Asilomar Principles, IEEE’s Ethically Aligned Design, and the Montréal Declaration has set viable guardrails and inspired other AI Governance mechanisms such as laws, corporate policies, and standards. These sets of principles typically include themes such as fairness and equity, privacy, social and environmental impact, transparency and explainability, accountability, and human intervention.

While establishing principles was necessary to start the discussion around what good oversight for AI looks like, these principles are high-level, aspirational statements. Practitioners designing and building AI products often require guidance on how to implement them in a reasonable manner and how to manage potential trade-offs between principles.

AI Governance programs at organizations should either develop their own AI principles based on an existing set, such as those mentioned above, or align with an existing set directly. Each organization’s principles should be prioritized based on the industry, domain, and types of AI systems being deployed. They should also be linked to the organization’s mission and values. AI principles should serve as a guiding light, providing direction on both what is important and the types of AI systems organizations should pursue.

AI Frameworks

Recognizing the need to move beyond principles, many organizations have started to develop frameworks for AI oversight. The most prominent of these is NIST’s AI Risk Management Framework (AI RMF).

Often mistaken for a standard to comply with, the AI RMF is meant to provide organizations with a guiding structure to operate within, outcomes to aspire toward, and a common set of AI risk concepts, terms, and resources. While it does categorize potential AI-related harms to people, organizations, and ecosystems, the AI RMF does not define a specific set of guidelines or practices for the development of AI systems.

Similarly, work such as the OECD’s Framework for the Classification of AI Systems builds upon the OECD’s AI Principles to establish a common baseline within the AI community. This includes establishing definitions for certain aspects of AI and identifying the components of an AI system.

Though laws and regulations do not require adherence to them, AI frameworks provide important terms and reference materials to ground research and enable community learning and collaboration. In the future, they may inform or become referenced in mandatory regulations and procurement requirements.

Laws and Policies

As the chair of the US Federal Trade Commission said in April 2023, “There is no AI exemption to the laws on the books.” Existing laws and policies, whether general, issue-specific, or industry-specific, apply to the use of AI systems. The interactions of existing laws with the use of AI systems need to be incorporated into AI Governance programs.

In some areas, this can be difficult. For example, the intellectual property and privacy aspects of Generative AI training are subject to various interpretations by legal experts. In such areas, monitoring certain high-profile intellectual property-related lawsuits—including two proposed class action lawsuits by coders and artists—could shed light on the legal issues at play and how courts might view them.

Governments are also adopting legislation specifically related to AI use, such as New York City’s law on the use of automated employment decision tools. National governments generally have adopted a sector-specific approach, a horizontal approach, or a combination of the two, as in Canada.

The US, UK, and Japan employ a sector-specific approach, generally by incrementally building upon and applying existing sector-specific laws to AI systems. In the US, the White House published a non-binding Blueprint for an AI Bill of Rights in October 2022, which federal agencies have been interpreting to guide their application of existing regulations to AI systems. In April 2023, the Civil Rights Division of the US Department of Justice, the Consumer Financial Protection Bureau, the Federal Trade Commission, and the Equal Employment Opportunity Commission jointly committed to applying existing regulations to prevent bias and discrimination in automated decision systems.

The EU’s AI Act is perhaps the most prominent example of a horizontal approach to AI law. It covers all AI use cases regardless of industry, has been under development since 2021, and is likely to be passed in some form by 2024. Its horizontal approach seeks to protect people from all types of AI systems; however, it also creates a dependency on additional tools such as impact assessments and sector- and context-specific standards.

Significant questions remain regarding the specificity of draft AI laws. Open issues such as the division of responsibility between developer and deployer, what is considered a high- or limited-risk system, the treatment of foundation models, enforcement mechanisms, and the lack of global coordination are major barriers to finalizing AI laws and bringing them into force.

Voluntary Guidelines

As mandatory AI-specific laws and policies remain under development in most jurisdictions, governments are working with industry and civil society organizations to develop voluntary guidelines.

In July 2023, the White House described voluntary commitments that it had secured from leading AI companies. In August 2023, the government of Canada published its draft Guardrails for Generative AI — Code of Practice aimed at oversight over Generative AI systems.

Many organizations look at voluntary guidelines as both sources of industry best practices and an indication of policymakers’ current thinking about AI.

Standards

Given the high-level nature of the AI Governance instruments discussed so far, practitioners often look to standards to provide applicable definitions, objectives, and evaluation measurements for AI systems. Standards-development bodies, including ISO/IEC, IEEE, ITU, CEN-CENELEC, and ETSI, have all developed AI standards. AI standards under development range from high-level, such as AI Governance methods, to context-specific, such as establishing ways to evaluate bias in recommender systems. With hundreds currently in development, it is difficult to understand which to use when.

Organizations and governments look to certain families of standards as benchmarks to show compliance with regulatory requirements. For example, many organizations expect that EU lawmakers will consider meeting certain standards developed by ISO/IEC JTC 1/SC 42 as a method of demonstrating compliance with some of the AI Act’s requirements. However, most standards will remain voluntary and will be used by organizations as an authoritative source of best practices rather than merely to show compliance with regulations.

Efforts are underway at organizations such as the Responsible AI Institute to help simplify the navigation of these standards by instituting certification programs that reference relevant standards, thereby creating a one-stop review process for AI systems. Organizations can pursue these certifications to demonstrate to customers, industry partners, and regulators that their processes and/or products conform to the underlying standards.

Organizational AI Governance

Whether your organization is building, buying, or supplying AI, establishing a Responsible AI program specific to its organizational context is vital. Regardless of the maturity of an organization’s involvement in AI, it is imperative to set up these governance practices, as failures of AI products can have serious implications for a business, even if the products are procured from a supplier.

A comprehensive Responsible AI program involves a cross-functional team working to develop mechanisms such as principles and policies; governance for review and decision-making; processes for product development, risk reviews, and continuous monitoring; data and technology that support Responsible AI by design; and a culture of responsible use among staff.

These practices help mitigate internal risks and potential risks to customers, and they help businesses prepare for compliance with forthcoming AI regulations. They also provide mechanisms to ensure that the AI systems organizations build and use are consistent with their corporate values and corporate social responsibility commitments.

How to Bring It All Together

Given AI’s growing role in the economy and the drafting of AI laws and policies, developing an AI Governance program is an urgent need at every organization. Our research suggests that it takes organizations two to three years to reach the highest levels of organizational Responsible AI maturity, highlighting that organizations must begin now to ensure that they are prepared to comply with AI laws once they are in force. While the requirements of laws, policies, and other instruments are still to be finalized, organizations can be confident that organizational governance, policies, and processes will be needed to meet regulatory requirements.

Building a comprehensive Responsible AI program takes time and resources, but several simple steps enable organizations to make rapid progress. Begin by forming a committee of senior leaders who can oversee development and implementation. Their first task is developing principles, policies, and guardrails that govern the use of AI throughout the organization. These provide the foundation upon which the rest of the Responsible AI program can be built. The next step is creating linkages to existing corporate governance functions (e.g., risk committee), ensuring there are clear escalation paths and decision rights while avoiding inadvertently creating a shadow risk function. Finally, develop an AI risk triage framework to identify inherently high-risk applications that merit deeper review and oversight. These initial steps help organizations gain the knowledge and basic suite of tools they need to develop processes to both protect their customers and adhere to future compliance requirements.

About The RAI Institute

The Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. The RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products.

