In 2018, while working for the Government of Canada, I had the opportunity to lead the development of the Directive on Automated Decision-Making. This policy, the first of its kind, set guardrails for the government’s use of AI. Seeking a balance between our mandate to protect the public and the need for operational experimentation, we developed a principles-based policy informed by our understanding of how AI was being used, early research, and public sentiment.

At the time, we saw more robotic process automation than deep learning, but the writing was on the wall: AI was going to change how the government worked and how every industry would work going forward.

Canada was early to the oversight game, but it was not the only government thinking about the social implications of these technologies. Because these systems can predict who gets an expedited study visa, who gets hired, or who gains increased access to credit, their potential harms were real. Policymakers around the world, international organizations, industry experts, ethicists, and safety researchers alike were addressing their concerns by developing principles-based reports and recommendations on how AI should be distributed, managed, and used given its social implications.

In addition to the Directive, early and important work included the Asilomar Principles, OECD’s AI Principles, IEEE’s Ethically Aligned Design, and the Montreal Declaration. However, my first-hand experience trying to implement these high-level guardrails made it clear that we needed to go a level (or a few) deeper to understand how these principles could be implemented in practice. I also observed that while we use “AI” as a general term, it encompasses many different technologies whose implications vary widely depending on how they are used. This is why I joined the Responsible AI Institute (RAI Institute), where I set out to bridge the gap between these principles and practice.

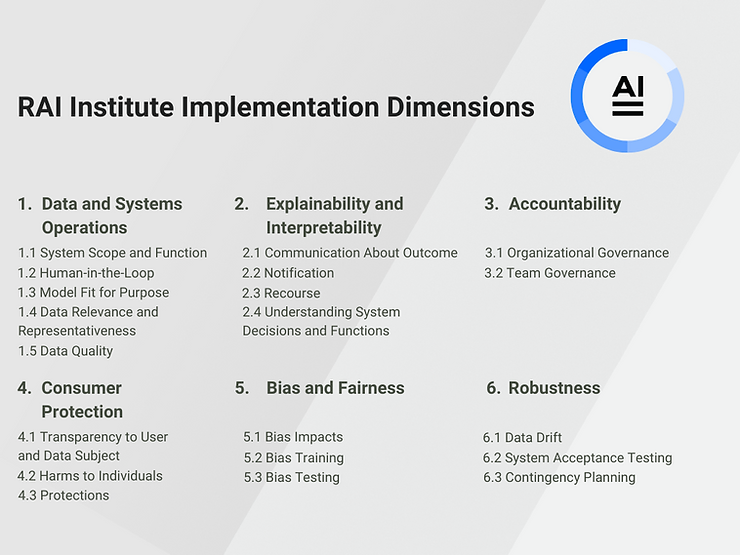

When I joined RAI Institute, I worked with an incredible group of volunteers to map these principles-based documents into a framework for evaluating AI systems. We grounded this effort in OECD’s AI Principles and convened a multi-stakeholder group of experts from academia, industry, government, and civil society to develop a responsible AI assessment framework. While we did not want to be the arbiter of what is and is not acceptable, it was, and remains, important for practitioners who develop, deploy, and use these systems to know that the systems are safe and trustworthy. Tested and validated against multiple use cases in health care, financial services, and HR, our framework contains 7 dimensions, 13 sub-dimensions, and approximately 100 questions, linked to standards where possible. It has become a comprehensive evaluation tool that organizations building, buying, and supplying AI can use before deployment, giving them confidence that they are adhering to emerging AI regulations and doing their due diligence to keep their organizations and customers safe.

Since our early work, many organizations have developed their own responsible AI frameworks. These frameworks range from high-level principles to industry-specific guidance. As a casual observer of the evolving responsible AI community, you might be heartened to see how much work has been done in such a short period of time to ensure harm from these systems is mitigated. However, as a practitioner, knowing which framework to use can be overwhelming.

Our aim at RAI Institute is to help practitioners navigate this landscape and implement responsible AI practices in an easy-to-understand way. We don’t want to add to the confusion. This is why we have decided to align our framework with arguably the most authoritative and widely adopted AI framework: NIST’s AI Risk Management Framework (AI RMF).

We previously wrote an article with Reva Schwartz from NIST outlining what the AI RMF is and how its companion tools can be used within your organization. Because we share the objective of building AI systems in a safe, responsible, and trustworthy manner, this alignment harmonizes our related efforts. By using our assessment, organizations will have a simplified way to adhere to the concepts in NIST’s AI RMF as well as emerging standards such as ISO/IEC 42001, the standard for artificial intelligence management systems.

Simply put, the NIST AI RMF establishes the objectives to achieve, and the RAI Institute’s assessment guides how to achieve them.

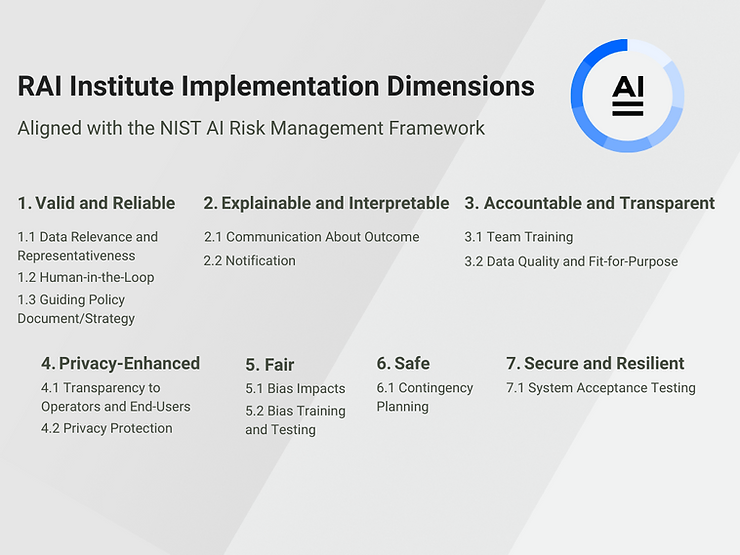

The following shows how our previous framework’s dimensions map to the NIST AI RMF dimensions. The sub-dimension names listed here do not comprehensively describe the contents of the assessment.

Current RAI Institute framework dimensions:

Previous RAI Institute framework dimensions:

We will continue to evolve our framework pragmatically and to lead in advancing responsible AI adoption.

About Responsible AI Institute (RAI Institute)

Founded in 2016, Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products.

Members include leading companies such as Amazon Web Services, Boston Consulting Group, ATB Financial, and many others dedicated to bringing responsible AI to all industry sectors.

Media Contacts

Audrey Briers

Bhava Communications for RAI Institute

+1 858.314.9208

Nicole McCaffrey

Head of Marketing, RAI Institute

+1 440.785.3588
