Last updated October 20, 2023.

TLDR: Released in April 2021, the draft EU AI Act (AIA) proposes a horizontal regulation for AI systems that touch the EU. The AIA classifies AI use by risk level (unacceptable, high, and low/minimal) and describes documentation, auditing, and process requirements for each risk level. As of June 14, 2023, the EU Council and Parliament have both finalized their positions on the draft. Experts estimate the law may pass as soon as Q4 2023, and the stated aim is to reach an agreement by the end of 2023. The bill versions have been subject to debate around risk categorizations, consumer protections, defining AI, general purpose AI, appropriate exceptions, how it affects innovation, and more. You can check out a detailed comparison of the three drafts here.

Initially released in April 2021, the EU Artificial Intelligence Act (AIA) proposes a horizontal regulation for AI systems in the EU market. There have been three drafts of the Act thus far (21 April 2021 – Proposal first published by the European Commission; 6 December 2022 – EU Council adopted its common position; 14 June 2023 – EU Parliament adopted its negotiating position). This blog post will focus on the most recent draft and surrounding considerations.

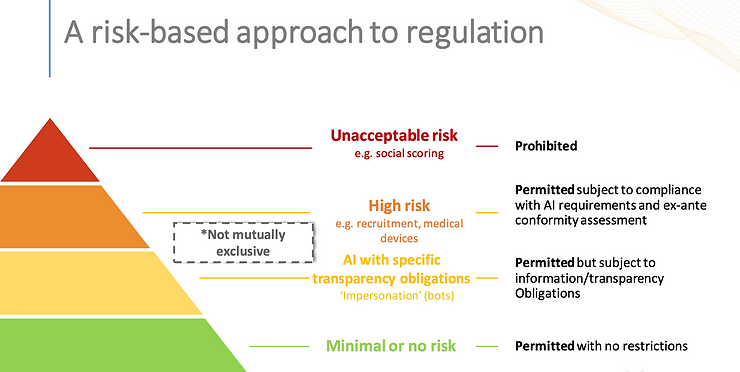

The draft AIA classifies AI use by risk level (unacceptable, high, limited, and minimal) and describes requirements for each risk level.

Source: https://www.ceps.eu/wp-content/uploads/2021/04/AI-Presentation-CEPS-Webinar-L.-Sioli-23.4.21.pdf?.

AI systems deemed to pose an unacceptable risk, such as government-led social scoring, are banned outright. Meanwhile, high-risk systems, such as those used in predictive policing or border control, are subject to requirements that include undergoing a conformity assessment and meeting monitoring requirements throughout their lifecycle.

AI systems deemed to entail low or minimal risks do not face the same obligations, though they are encouraged to meet them voluntarily.

Read on for an overview of the AIA and what it means for the AI landscape!

Why it Matters

There’s a lot of buzz surrounding the EU AI Act – for good reason. First, let’s establish why this proposal is so important, particularly for businesses, the public, and regulators.

Why it Matters for Businesses

In a word: Compliance

The AIA matters for businesses that use AI-based systems because it affects their compliance obligations and, by extension, their bottom line. Businesses would be subject to this law if an output of their AI system is used within the EU, regardless of where the business operator or system is based.

The AIA underscores the need to be proactive in responsible AI efforts. The World Economic Forum reports that responsible AI processes can take three years on average to establish, so businesses that start early will be better positioned for compliance, stakeholder satisfaction and trust, harnessing the business benefits of AI, and more.

Why it Matters for the Public

In a word: Accountability

This landmark legislation is important for people living in the EU, consumers, and the general public beyond the EU since it sets the global bar for AI regulation. Responsible AI advocates worldwide will watch EU developments closely and model their strategies accordingly.

Why it Matters for Regulators

In a word: Precedent

As the first major AI regulation, the AIA will set a critical precedent for future AI regulatory approaches. Precedent-setting will relate to several aspects of the proposal, including:

- Applying a risk-based approach

- How broadly the bill will define AI

- Rights conferred on individuals and businesses

- Determinations of liability given the complexity of AI systems and decision-making

- How “future-proof” regulation can and should be

- The breadth of risk categorizations and banned AI use cases

- How interests of big tech and public interest are balanced

Why it Matters for Responsible AI

Due to AI’s widespread impacts on society, it’s critical that AI is designed, developed, and deployed responsibly with a keen eye on consumer impacts and potential harms.

Regulation is a key part of the RAI risk management ecosystem and governance landscape. The AIA represents a huge step in establishing legal requirements and enforcement mechanisms for AI systems.

To understand the goals of AI regulation, let’s take a step back and examine what responsible and trustworthy AI looks like. After research and validation by experts, the RAI Institute has put forth a framework for RAI used by our members worldwide, currently under review with the Standards Council of Canada (SCC). Our responsible AI Framework consists of seven dimensions, which should accordingly be represented in regulatory approaches to mitigating the dangers of AI:

Source: Responsible AI Institute

A common criticism of the EU AI Act is that it does not go far enough in outlining consumer protections or properly protecting the public from AI harm, bias, and unfairness. The provisions included in the ultimate version of the law will broadly affect global stakeholders and the RAI landscape.

What’s in the EU AI Act

In this section, we’ll look at an overview of the AI Act, its definitions, and requirements.

At a Glance

The proposed AIA consists of two documents: the proposal itself and a companion document with nine Annexes. Here’s your guide to skimming the dense 108-page document.

- Title I Scope and Definitions: Outlines the scope of the proposal and how it would affect the market once in place.

- Title II Prohibited AI Practices: Defines AI systems that violate fundamental rights and are categorized at an unacceptable level of risk.

- Title III High-Risk AI Systems: Covers the specific rules for AI systems that carry a high risk based on their intended purpose and relevant product safety legislation.

- Title IV Transparency Obligations for Certain Systems: Lists transparency obligations for systems that 1) interact with humans, 2) detect emotions or determine social categories based on biometric data, or 3) generate or manipulate content (e.g., “deep fakes”).

- Title V Measures in Support of Innovation: Adds to the innovation-friendly legal framework.

- Title VI Governance and Implementation: Sets up the Act’s governance systems at the national and EU levels.

- Title VII Governance and Implementation (cont.): Continues establishing the Act’s governance systems, including the monitoring function of the European Commission and national authorities.

- Title VIII Governance and Implementation (cont.): Establishes the reporting and monitoring obligations for AI system providers.

- Title IX Codes of Conduct: Outlines a framework encouraging the voluntary applications of the high-risk system requirements (to non-high-risk AI systems).

- Title X-XII Final Provisions: Establishes rules for delegation, implementation, and maintenance powers.

Definitions

The original text of the Act “proposes a single future-proof definition of AI.” That definition is:

“‘artificial intelligence system’ (AI system) means software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with”

The EU Council’s December 2022 consensus proposal narrows this definition to “systems developed through machine learning approaches and logic- and knowledge-based approaches.”

The original text of the AIA also describes AI as “a fast-evolving family of technologies that can bring a wide array of economic and societal benefits across the entire spectrum of industries and social activities.”

For more information on AI definitions, terminologies, and taxonomies, see the May 2023 EU-US Terminology and Taxonomy for Artificial Intelligence.

Under the proposal, AI is categorized by four levels of risk:

- Unacceptable risk

- High-risk

- Low (limited) risk

- Minimal risk

Unacceptable Risk

The first category delineates which uses of AI systems carry an unacceptable level of risk to society and individuals and are thus prohibited under the law. These prohibited use cases include AI systems that entail:

- Social scoring

- Subliminal techniques

- Biometric identification in public spaces

- Exploiting people’s vulnerabilities

For these uses, the Act describes when and how exceptions may be made, such as in emergencies related to law enforcement and national security. This distinction is subject to debate and is described further in Key Points of Discussion below.

High-Risk

Requirements for high-risk systems are at the crux of this proposed regulation. They include risk mitigation measures such as documentation, data safeguards, transparency, and human oversight. The list of high-risk AI systems that must deploy additional safeguards is lengthy and can be found in Art. 6, Annex III of the Act. These systems include those that do the following:

- Identify and categorize individuals based on their biometric data (e.g., Ireland’s use of facial recognition checks during the ID card application process)

- Determine people’s access to social services or benefits (e.g., France’s use of an algorithm to calculate housing benefits)

- Automate hiring decisions, such as sorting resumes or CVs

- Predict a person’s risk of committing a crime

Low-Risk

Low-risk (limited-risk) AI systems carry far fewer obligations for providers and users than their high-risk counterparts. They must follow certain transparency obligations outlined in Title IV of the proposal. Systems that fall into this category include:

- Biometric categorization, or “establishing whether the biometric data of an individual belongs to a group with some predefined characteristic in order to take a specific action”

- Emotion recognition

- Deep fake systems

Minimal-Risk

The language of the proposal describes minimally risky AI systems as all other systems not covered by its safeguards and regulations. There are no requirements for systems in this category. Of course, businesses with multiple kinds of AI systems will need to ensure compliance with each appropriately.
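To make the four tiers concrete, here is a minimal Python sketch of how a team might tag its own AI systems by tier. It is an illustration only, not a legal classification: the `RiskTier` enum, the `EXAMPLE_USES` labels, and the helper functions are hypothetical names invented for this example, and the mapping simply reuses the example use cases listed in the sections above. Treating anything unlisted as minimal risk mirrors the proposal's "all other systems" wording but is a simplification.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"  # prohibited outright
    HIGH = "high"                  # conformity assessment, monitoring, documentation
    LIMITED = "limited"            # transparency obligations (Title IV)
    MINIMAL = "minimal"            # no requirements under the proposal


# Example use cases drawn from the lists in this post. The keys are
# illustrative labels (an assumption), not terms defined by the Act.
EXAMPLE_USES = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "subliminal_techniques": RiskTier.UNACCEPTABLE,
    "exploiting_vulnerabilities": RiskTier.UNACCEPTABLE,
    "resume_screening": RiskTier.HIGH,
    "benefits_eligibility": RiskTier.HIGH,
    "crime_risk_prediction": RiskTier.HIGH,
    "emotion_recognition": RiskTier.LIMITED,
    "deep_fake_generation": RiskTier.LIMITED,
}


def classify(use_case: str) -> RiskTier:
    """Return the tier for a known example; unlisted uses fall back to MINIMAL."""
    return EXAMPLE_USES.get(use_case, RiskTier.MINIMAL)


def group_by_tier(systems: dict[str, str]) -> dict[RiskTier, list[str]]:
    """Group a portfolio of {system name: use case} by risk tier."""
    grouped: dict[RiskTier, list[str]] = {tier: [] for tier in RiskTier}
    for system_name, use_case in systems.items():
        grouped[classify(use_case)].append(system_name)
    return grouped


if __name__ == "__main__":
    portfolio = {"hr-tool": "resume_screening", "avatar-app": "deep_fake_generation"}
    for tier, names in group_by_tier(portfolio).items():
        print(tier.value, names)
```

Grouping by tier, rather than picking a single "worst" tier, reflects the point above that a business with several kinds of AI systems needs to meet the obligations for each one separately.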

Who’s Liable?

Many of the AI Act’s obligations are the responsibility of the “provider,” the party that places the AI system on the market (or makes a substantial modification to it). The provider can be a third party or the company that developed the AI. Other parties carry obligations too: for example, distributors and importers of the AI system, rather than the original provider, would be subject to verification obligations before making the AI system available on the market. AI systems that already exist when the Act is implemented are exempt from its requirements unless those systems undergo a “significant” change in design or purpose.

Users of the AI system, meaning the individuals at a given company employing the high-risk AI system, are subject to responsible design, development, deployment, and monitoring requirements. User responsibilities include ensuring data quality, system monitoring and logging, following auditing procedures, meeting applicable transparency requirements, and maintaining an AI risk management system.

Obligations for High-Risk Systems

The AIA is a sweeping horizontal regulation that affects any provider or distributor of AI systems that touch the EU market, even if that business is not EU-based. Depending on how the Act categorizes the risk level of their AI systems, all applicable businesses would have to meet various documentation, monitoring, and training obligations. The AIA outlines new obligations for AI’s use in employment, financial services, law enforcement, education, automobiles, machinery, toys, and medicine. Should the AIA pass, a wave of technical and regulatory requirements is coming for those who develop or use high-risk AI systems.

In this section, we’ll break those requirements down in further detail.

Requirements for high-risk systems fall into three main categories: conformity assessments, technical and auditing requirements, and post-market monitoring requirements. Across these categories, the obligations include:

- Conformity assessments (to ensure compliance with the obligations of the AIA)

- Data bias safeguards

- Data governance practices

- Data provenance, meaning data and outputs can easily be verified and traced throughout the system’s life cycle

- Provisions for transparency and understandability

- Appropriate human oversight of AI systems

- Documentation requirements for all of the above

Conformity Assessments for High-Risk Uses

Ex-ante conformity assessments are a requirement for high-risk AI systems under the AIA. This means that in order to be placed on the market, AI systems in high-risk sectors must demonstrate compliance with the law.

For AI products that currently fall under product safety legislation, companies can meet the AIA’s requirements via existing third-party conformity assessment structures and frameworks. Those who provide AI tools that aren’t governed currently by regulatory frameworks will be responsible for conducting their own conformity assessments and documenting their system in the new EU-wide high-risk system database.

Technical and Auditing Requirements for High-Risk AI

Next, those responsible for high-risk AI systems must meet technical requirements related to the design, development, deployment, and maintenance of AI systems, as well as audit documentation requirements. These include:

- Creating and maintaining a risk management system throughout the lifecycle of the AI system;

- Quantitatively driven testing of the system to ensure the system’s consistent performance aligns with its intended purposes, identify risks, and undertake appropriate mitigation measures;

- Establishing effective data governance controls to require that training, testing, and validation datasets are error-free, representative, and complete;

- Documenting algorithmic design, system architecture, system logging, and model specifications;

- Designing systems with transparency in mind so that users can understand and interpret the AI system’s output;

- Incorporating human oversight into the system design to minimize harm, including allowing users to access an override or off-switch functionality.
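As a rough illustration of how a team might track the requirement areas listed above for a single high-risk system, here is a short Python sketch. Everything in it is an assumption made for the example: the area names paraphrase the bullets above, and `HighRiskSystemRecord` and its methods are hypothetical, not anything prescribed by the Act.

```python
from dataclasses import dataclass, field

# Requirement areas paraphrased from the list above (labels are illustrative).
REQUIREMENT_AREAS = (
    "risk_management_system",
    "testing",
    "data_governance",
    "technical_documentation",
    "transparency",
    "human_oversight",
)


@dataclass
class HighRiskSystemRecord:
    """Tracks which requirement areas have supporting evidence on file."""

    name: str
    evidence: dict[str, str] = field(default_factory=dict)

    def record(self, area: str, reference: str) -> None:
        """Attach a reference (e.g. a document ID) to a requirement area."""
        if area not in REQUIREMENT_AREAS:
            raise ValueError(f"unknown requirement area: {area}")
        self.evidence[area] = reference

    def outstanding(self) -> list[str]:
        """Return the requirement areas that still lack evidence."""
        return [area for area in REQUIREMENT_AREAS if area not in self.evidence]


if __name__ == "__main__":
    system = HighRiskSystemRecord("cv-screener")
    system.record("testing", "test-report-001")
    print(system.outstanding())
```

A record like this is only a bookkeeping aid; whether a given piece of evidence actually satisfies the Act is a legal question that the sketch does not try to answer.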

Post-Market Monitoring for High-Risk AI

Once high-risk AI systems are on the market, providers are also subject to post-market monitoring obligations. In the event of serious incidents involving the AI system, such as those that infringe upon fundamental rights or breach safety laws, providers of the system must report the incident to the national supervisory body. If a system violates the Act, regulators can require access to the source code, and the AI system can be forcibly withdrawn from the market.

Oversight & Enforcement

To oversee enforcement, the AIA creates a structure similar to that of the GDPR, under which member state regulators would be responsible for enforcement and sanctions.

To advise regulators, the AIA would establish a European AI Board comprised of representatives from member states and the Commission. This Board will weigh in and issue recommendations on “matters related to the implementation of this regulation.” Furthermore, the Board can invite external experts and observers to attend its meetings and hold exchanges with interested third parties.

Penalties

Penalties for violating the AIA will apply extraterritorially to parties outside of the EU if the system output occurs within the EU, similar to the General Data Protection Regulation (GDPR).

Failing to comply with the rules around prohibited uses and data governance is punishable by a fine of up to €30M or 6 percent of worldwide annual turnover (whichever is higher). For non-compliance with the Act’s other requirements, including those for high-risk AI systems, the upper limit is €20M or 4 percent of turnover.

Failing to supply accurate and complete information to national bodies can result in a fine of up to €10M or 2 percent of turnover.
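The “whichever is higher” rule is easy to misread, so here is a small worked sketch in Python using the figures quoted above. The tier labels and the `max_fine` function are hypothetical names for this example, and the sketch assumes the “whichever is higher” rule applies to all three tiers, which this post states explicitly only for the first. The result is the ceiling on a fine, not a prediction of what a regulator would actually impose.

```python
from decimal import Decimal

# (fixed cap in euros, share of worldwide annual turnover), as quoted in this post.
# Tier labels are illustrative assumptions, not terms from the Act.
FINE_TIERS = {
    "prohibited_use_or_data_governance": (Decimal("30000000"), Decimal("0.06")),
    "high_risk_and_other_requirements": (Decimal("20000000"), Decimal("0.04")),
    "incorrect_information": (Decimal("10000000"), Decimal("0.02")),
}


def max_fine(tier: str, worldwide_annual_turnover_eur: int) -> Decimal:
    """Upper limit of the fine: the higher of the fixed cap and the turnover share."""
    fixed_cap, share = FINE_TIERS[tier]
    return max(fixed_cap, share * Decimal(worldwide_annual_turnover_eur))


if __name__ == "__main__":
    # A company with EUR 1 billion turnover: 6 percent exceeds the EUR 30M cap.
    print(max_fine("prohibited_use_or_data_governance", 1_000_000_000))
    # A company with EUR 100 million turnover: the EUR 30M fixed cap is higher.
    print(max_fine("prohibited_use_or_data_governance", 100_000_000))
```

In other words, the percentage only matters for larger companies: for the top tier, the turnover-based figure overtakes the fixed €30M cap once worldwide annual turnover exceeds €500M.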

Key Points of Discussion

Today, there are thousands of amendments to this monumental legislation, and thus many points of debate, as the draft Act takes shape and regulators balance the interests of big tech, government, and public stakeholders in and out of the EU.

Here are the main points of contention:

Regulating Generative AI, including Large Language Models (LLMs)

With the recent explosion in generative AI, it’s no surprise that there’s been wide demand for the EU AI Act to account for the regulation of these technologies. This includes general purpose AI (GPAI), generative AI, and foundation models, none of which were included in the original draft text.

The EU Parliament and Council have generally opted for establishing separate rules for these AI systems. Under the current language, providers of foundation models must meet certain risk mitigation requirements before going public with models. These requirements include data governance, safety checks, and copyright checks for training data. Providers must also account for risks to consumer rights, health and safety, the environment, and rule of law.

Discussion on this regulatory approach has centered on scoping these AI use cases, balancing innovation with risk mitigation, and ensuring transparency.

Rights and Redress

In a nutshell, advocates are saying that the Act must go further in its consumer protection provisions.

As it’s written, the Act disincentivizes irresponsible AI through steep fines. However, a significant point of criticism from consumer protection advocates is that the Act fails to include redress for the people impacted by those AI systems or account for the impacts of existing technological inequities, such as digital divides.

As it currently stands, the AIA fails to contain any provisions for individual or collective redress, confer individual rights for people impacted by the risks, or establish a process for people to participate in the investigation of high-risk AI systems and their impacts.

To codify these rights, Members of the European Parliament (MEPs) are advocating for rights and redress mechanisms, including the right to lodge complaints with a supervisory authority, the right to an explanation for the high-risk AI system’s decisions, and the right not to be subject to a non-compliant AI system.

The Act plans to establish a database for the registration of high-risk AI systems that are put on the EU market. Currently, this database does not include information on the context of the system’s deployment. Co-rapporteur Brando Benifei and other MEPs are calling for a requirement to also register the application of a high-risk system when the system user is a public authority or someone acting on their behalf.

Definition of AI

Given how this law can potentially affect millions of people and future approaches to AI/ML regulation, terminology discussions are rife throughout the document. First and foremost, the definition of artificial intelligence itself and the scope of the systems subject to the law have been subject to an ongoing debate.

The challenge for regulators is that any definition of AI, high-risk, or similar terms must be specific yet broad enough to remain future-proof in a rapidly evolving field and to capture the risks associated with AI systems a few years from now.

Regulators are also grappling with how to regulate AI systems that do not have a specific purpose. Specifically, MEPs have confirmed proposals to enact stricter requirements on foundation models, a sub-category of General Purpose AI that includes the likes of ChatGPT.

Risk Categorizations

Another important debate around definitions centers on the risk categorizations and definitions present in the Act.

Human rights advocates argue that any AI systems that threaten human rights in ways that cannot be effectively mitigated ought to be banned.

Prohibited Uses

One area where advocates feel the Act does not go far enough is in the number of use cases that would be prohibited, particularly biometric and surveillance-related uses. For example, in April 2023, Amnesty International released an open letter urging the EU Parliament to protect the human rights of migrants, refugees, and asylum seekers by banning the use of discriminatory systems.

A wide body of research warns of the risk of biometric and surveillance practices, particularly for people of marginalized backgrounds. Currently, the Act includes language banning social scoring, a system of assigning an individual a trustworthiness credit score based on various behavioral data which can impact their ability to get a loan, access public benefits, or board a plane.

The use of AI in law enforcement is a hot topic of discussion given the serious risk involved in decision-making around carceral mechanisms of policing, incarceration, and surveillance. Currently, the Act restricts law enforcement’s use of facial recognition in public places. However, there is room in the current writing to allow biometric mass surveillance practices in a broad range of situations, such as finding missing children or identifying the suspect of a criminal offense punishable by a sentence of three years or more.

EU Parliament co-rapporteurs Dragoş Tudorache and Brando Benifei are in favor of adding predictive policing to the list of banned uses as it “violates human dignity and the presumption of innocence, and it holds a particular risk of discrimination.” Germany is one of the few member states that support a stronger ban and the prohibition of facial recognition in public places.

Exceptions for National Security, Defense, and Military Purposes

The December 2022 EU Council consensus draft of the AIA states that AI systems used for national security, defense, or military purposes are outside the scope of the regulation. Furthermore, as noted above, there are many exceptions to requirements for law enforcement uses when they use AI to detect and prevent crime. Some advocates are concerned that these exclusions could result in harm, for example by allowing autocratic governments to use social scoring or biometric mass surveillance under the guise of “national security.”

Broader AI Regulatory Context

Proposed by the European Commission, the AIA represents the first-ever legal framework on AI, intended to address the risks of AI and promote trustworthiness. It’s also intended to position Europe as a global leader, build “an ecosystem of excellence in AI,” and strengthen the EU’s ability to compete globally. It goes hand in hand with the Coordinated Plan on AI.

The AIA is taking shape amidst other global AI regulation and data protection developments. Here are just a few to be aware of:

- China’s Interim Measures for the Management of Generative Artificial Intelligence (AI) Services – In Effect

- US Algorithmic Accountability Act – Proposed

- Canada’s Artificial Intelligence and Data Act (AIDA) – Proposed

- Canada’s Directive on Automated Decision-Making – In Effect

- EU AI Liability Directive – Proposed

- US American Data Privacy and Protection Act – Proposed

- China’s Internet Information Service Algorithmic Recommendation Management Provisions – In Effect

- UK Algorithmic Transparency Standard – Proposed

- China’s Provisions on the Administration of Deep Synthesis Internet Information Services – In Effect

EU Legislative Process

So when might we actually expect this landmark law to pass? We may see passage as soon as late 2023, or in 2024. Let’s review the AIA timeline and what needs to happen before it can come into effect.

History

In April 2021, the EU Commission released its proposal for the AIA after which the EU Council and Parliament began working on amendments to the bill. A reconciled version of the bill approved by both bodies is expected to materialize in 2023 or 2024.

Within the EU Parliament, two committees are leading negotiations: the Committee on the Internal Market and Consumer Protection (IMCO) and the Committee on Civil Liberties, Justice, and Home Affairs (LIBE).

In April 2022, co-rapporteurs Brando Benifei and Dragoş Tudorache shared their draft report on the AIA.

After this draft was shared, Members of the European Parliament (MEPs) tabled over 3,000 amendments, which were to be merged and voted on within committees in October 2022. That vote did not take place as planned, and the original schedule was delayed.

An EU Parliament plenary session to vote on its version of the bill was postponed from November 2022 to January 2023.

In December 2022, the EU Council formally adopted its position on the Act.

After a key April 2023 vote, the EU Parliament reached a provisional political deal on the Act. It finalized its negotiating position on 14 June 2023.

Now, the two bodies can enter inter-institutional negotiations, or trilogues, to develop the final text for a vote. The amended bill will then be subject to scrutiny by the Council of Ministers.

Implementation

Once the Act is finally passed, there will likely be a lag between when the regulation comes into force (that is, when it legally exists) and when it applies (that is, when it is enforceable and exercisable).

This long grace period, called vacatio legis, gives relevant parties—Member States, authorities, system operators, license holders, organizations, and all other addressees or beneficiaries of the regulation—an opportunity to prepare for compliance. This means reviewing their AI systems, processes, documentation, policies, and culture to accommodate the requirements of the new law and remain competitive and compliant in the global market.

This two-year grace period doesn’t mean that businesses can breathe a sigh of relief and deprioritize compliance planning until years down the line, however. Proactivity is still critical to responsible AI governance: starting early maximizes business outcomes, and research shows that RAI processes can take three years on average to establish.

Rather than scrambling at the last minute to meet bare minimum requirements as AI regulation catches up, businesses benefit from starting where they are and investing the effort to build RAI the right way ahead of regulation, as our work consistently shows. The time to start is now.

Furthermore, some have noted that this delay period increases the risk that the Act’s provisions will be outpaced by technological advancements before they apply, so some are in favor of reducing this gap.

Staying Informed

To be in the know about the direction of the EU AI Act, there are a few great resources we recommend for awareness and a diversity of perspectives:

- The EU AI Act Newsletter from the Future of Life Institute, which you can subscribe to and receive biweekly in your inbox

- Website dedicated to the EU AI Act and updates

- Major news website Euractiv has an “AI Act” topic you can follow

There are also useful AI tracker resources from the OECD’s Live Repository of National AI Policies and Strategies, the International Association of Privacy Professionals’ Global AI Legislation Tracker, the Brennan Center for Justice’s AI Legislation Tracker, and Stanford’s 2023 AI Index.

Final Thoughts

In summary, the EU AI Act is a critical piece of proposed legislation that will significantly affect consumers, businesses, and regulators. As 2023 comes to an end, we hope to see major developments in the law’s progress and in its response to stakeholder concerns.