By: Sabrina Shih & Var Shankar

What is the RAISE Corporate AI Policy Benchmark?

The RAISE Corporate AI Policy Benchmark provides a methodical way for companies to compare their corporate Responsible AI policy against the Responsible AI Institute’s Model Enterprise AI Policy, which maps to, and operationalizes guidance within, the NIST AI Risk Management Framework.

Why is the RAISE Corporate AI Policy Benchmark aligned with the RAI Institute’s Model Enterprise AI Policy?

For seven years, the RAI Institute has been a leader in providing its organizational members with authoritative AI guidance. The RAI Institute’s Model Enterprise AI Policy encapsulates the lessons of those seven years, reflects objectives from existing and emerging AI laws, guidelines, frameworks, and standards, and, in particular, seeks to operationalize the elements of the NIST AI Risk Management Framework (AI RMF).

How did we map the RAI Institute’s Model Enterprise AI Policy to the requirements of the NIST AI RMF?

In the RAI Institute’s Model Enterprise AI Policy, the NIST AI RMF’s seven characteristics of trustworthy AI (valid and reliable, safe, secure and resilient, accountable and transparent, explainable and interpretable, privacy-enhanced, and fair with harmful bias managed) become Implementation Dimensions. The NIST AI RMF notes that these seven characteristics are interdependent and often in tension with one another, and that addressing them individually is not sufficient to make an AI system trustworthy. For example, the AI RMF treats validity and reliability as a prerequisite for the other characteristics: a system that does not consistently operate correctly under its expected conditions of use cannot be accurately assessed for other characteristics such as resilience or fairness. Implementation of the characteristics also differs by organization and use case; which characteristics are prioritized depends on context, and tradeoffs between characteristics are common. The Model Enterprise AI Policy acts as a benchmark for performance on each characteristic while recognizing that an AI system is only as responsible as its most weakly implemented characteristic.
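The weakest-link idea, together with the AI RMF’s point that validity and reliability come first, can be expressed as a small scoring rule. The sketch below is a hypothetical illustration, not the Benchmark’s published method: it assumes each characteristic already has a score between 0 and 1, treats the overall result as the minimum of those scores, and uses an arbitrary gate of 0.5 on valid and reliable, below which the other characteristics are treated as not assessable. The function names and example scores are made up.

```python
from typing import Mapping, Optional, Tuple

# Hypothetical key for the gating characteristic; names follow the NIST AI RMF wording.
VALID_AND_RELIABLE = "valid and reliable"


def weakest_link(scores: Mapping[str, float]) -> Tuple[str, float]:
    """Return the lowest-scoring characteristic and its score (ties keep the first one seen)."""
    if not scores:
        raise ValueError("no characteristic scores supplied")
    name = min(scores, key=scores.__getitem__)
    return name, scores[name]


def overall_score(scores: Mapping[str, float], gate: float = 0.5) -> Optional[float]:
    """Hypothetical aggregate: None when validity/reliability falls below `gate`,
    otherwise the minimum score across all characteristics."""
    if scores.get(VALID_AND_RELIABLE, 0.0) < gate:
        return None  # other characteristics are treated as not meaningfully assessable
    return weakest_link(scores)[1]


# Made-up example scores (fraction of requirements fulfilled per characteristic).
example_scores = {
    "valid and reliable": 0.8,
    "safe": 0.6,
    "secure and resilient": 0.7,
    "accountable and transparent": 0.9,
    "explainable and interpretable": 0.5,
    "privacy-enhanced": 0.75,
    "fair with harmful bias managed": 0.65,
}
print(weakest_link(example_scores))   # ('explainable and interpretable', 0.5)
print(overall_score(example_scores))  # 0.5
```

Using the minimum rather than an average means a strong result on one characteristic cannot hide a weak one, which matches the "only as responsible as its most weakly implemented characteristic" framing.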

Under each of the Model Enterprise AI Policy’s Implementation Dimensions, each mapped to one NIST AI RMF characteristic, the Policy lists Key Objectives, which detail subtopics related to implementing that Dimension. For example, Data Relevance & Representativeness and Data Quality & Accuracy are two Key Objectives (labeled 1.1 and 1.2, respectively) under Dimension 1, Valid & Reliable. Each requirement listed in the Policy is assigned to one Key Objective.
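One way to picture the Dimension, Key Objective, and requirement hierarchy is as nested records. This is an assumption about representation, not the Benchmark’s internal data model: the class names, field names, and requirement IDs are hypothetical, and the rule that a Key Objective’s code begins with its Dimension number is inferred only from the 1.1/1.2 example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class KeyObjective:
    code: str                 # e.g. "1.1"
    title: str                # e.g. "Data Relevance & Representativeness"
    requirement_ids: List[str] = field(default_factory=list)  # hypothetical requirement IDs


@dataclass
class ImplementationDimension:
    number: int               # e.g. 1
    title: str                # e.g. "Valid & Reliable", mapped to one NIST AI RMF characteristic
    objectives: Dict[str, KeyObjective] = field(default_factory=dict)

    def add_objective(self, objective: KeyObjective) -> None:
        # Assumed convention: Key Objective codes start with their Dimension number.
        if objective.code.split(".")[0] != str(self.number):
            raise ValueError(f"{objective.code} does not belong to Dimension {self.number}")
        self.objectives[objective.code] = objective


valid_reliable = ImplementationDimension(1, "Valid & Reliable")
valid_reliable.add_objective(KeyObjective("1.1", "Data Relevance & Representativeness"))
valid_reliable.add_objective(KeyObjective("1.2", "Data Quality & Accuracy"))
print(list(valid_reliable.objectives))  # ['1.1', '1.2']
```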

Additionally, the Model Enterprise AI Policy maps to the NIST AI RMF functions: Govern, Map, Measure, and Manage. Each function has categories (e.g. Govern 1) and subcategories (e.g. Govern 1.1). Each requirement listed in the Policy is assigned to one function subcategory.
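Because a subcategory label such as Govern 1.1 already names its category (Govern 1) and function (Govern), assigning a requirement to one subcategory is enough to place it under exactly one function. A minimal sketch, assuming labels follow the "Function N.M" pattern used in the examples above (the NIST text itself writes functions in capitals, so matching is case-insensitive); `parse_subcategory` and `Subcategory` are hypothetical names.

```python
import re
from typing import NamedTuple

NIST_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")
# Assumed label format "<Function> <category>.<subcategory>", e.g. "Govern 1.1" or "GOVERN 1.1".
_LABEL = re.compile(r"^(" + "|".join(NIST_FUNCTIONS) + r") (\d+)\.(\d+)$", re.IGNORECASE)


class Subcategory(NamedTuple):
    function: str  # e.g. "Govern"
    category: str  # e.g. "Govern 1"
    label: str     # e.g. "Govern 1.1"


def parse_subcategory(label: str) -> Subcategory:
    """Split a function subcategory label into its function, category, and full label."""
    match = _LABEL.match(label.strip())
    if match is None:
        raise ValueError(f"not a NIST AI RMF subcategory label: {label!r}")
    raw_function, category, subcategory = match.groups()
    function = raw_function.capitalize()  # normalize "GOVERN" -> "Govern"
    return Subcategory(function, f"{function} {category}", f"{function} {category}.{subcategory}")


print(parse_subcategory("Govern 1.1"))
# Subcategory(function='Govern', category='Govern 1', label='Govern 1.1')
```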

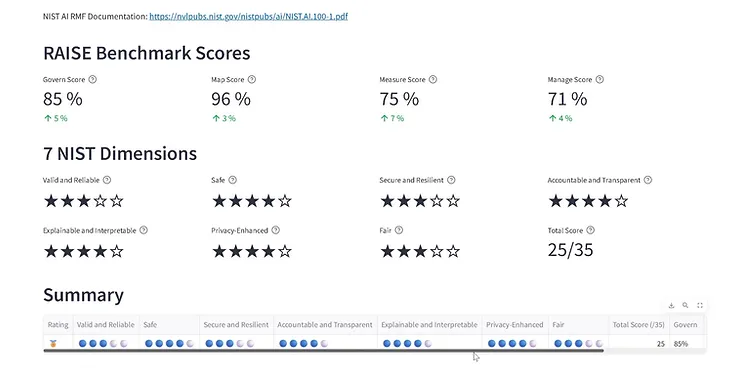

How can a person perform the benchmarking?

Each requirement of the Model Enterprise AI Policy is assigned to one Key Objective (a subcategory of an Implementation Dimension) and to one NIST AI RMF function subcategory. Each requirement therefore falls under exactly one NIST AI RMF characteristic and one NIST AI RMF function, so performance on each characteristic and each function can be measured as the percentage of its requirements that are fulfilled. Presently, each requirement is scored Y (1 point) or N (0 points), but as the RAISE Corporate AI Policy Benchmark tool is further developed, scoring may become more complex. For example, requirements may be weighted at more than 1 point or be assigned to more than one Key Objective. In these cases, an internal rubric will be used to assign weighted points accurately to each relevant category. Ultimately, comprehensiveness along the seven NIST AI RMF characteristics and the four NIST AI RMF functions will be represented by a numerical score: a percentage, a fraction (e.g. ⅗), and/or a rating.
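The per-characteristic and per-function percentages described above amount to a grouped count of fulfilled requirements. The following sketch assumes a flat list of requirements, each tagged with one characteristic and one function, and a Y/N answer per requirement. The `Requirement` record, the IDs, and the sample answers are hypothetical, and the `weight` field only stands in for possible future weighting; it does not model the internal rubric.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Mapping, Sequence, Tuple


@dataclass(frozen=True)
class Requirement:
    req_id: str          # hypothetical identifier, e.g. "R-001"
    characteristic: str  # NIST AI RMF characteristic, via the requirement's Key Objective
    function: str        # NIST AI RMF function, via the requirement's subcategory
    weight: int = 1      # 1 today; weights above 1 are only a possible future extension


def score_by(
    requirements: Sequence[Requirement], answers: Mapping[str, str], attribute: str
) -> Dict[str, Tuple[int, int]]:
    """Return {group: (points earned, points possible)}, grouping by `attribute`.

    `answers` maps req_id to "Y" (fulfilled) or "N"; a missing answer counts as "N".
    """
    earned: Dict[str, int] = defaultdict(int)
    possible: Dict[str, int] = defaultdict(int)
    for req in requirements:
        group = getattr(req, attribute)
        possible[group] += req.weight
        if answers.get(req.req_id, "N") == "Y":
            earned[group] += req.weight
    return {group: (earned[group], possible[group]) for group in possible}


def as_percent(earned: int, possible: int) -> float:
    """Convert an (earned, possible) pair to a percentage."""
    return 100.0 * earned / possible if possible else 0.0


requirements = [
    Requirement("R-001", "valid and reliable", "Govern"),
    Requirement("R-002", "valid and reliable", "Map"),
    Requirement("R-003", "safe", "Measure"),
    Requirement("R-004", "safe", "Manage"),
    Requirement("R-005", "valid and reliable", "Measure"),
]
answers = {"R-001": "Y", "R-002": "N", "R-003": "Y", "R-005": "Y"}

by_characteristic = score_by(requirements, answers, "characteristic")
by_function = score_by(requirements, answers, "function")
print(by_characteristic)  # {'valid and reliable': (2, 3), 'safe': (1, 2)}
```

Returning the (earned, possible) pair rather than a single number keeps both output forms available: the pair (2, 3) reads directly as the fraction 2/3, while `as_percent(2, 3)` gives roughly 66.7%.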

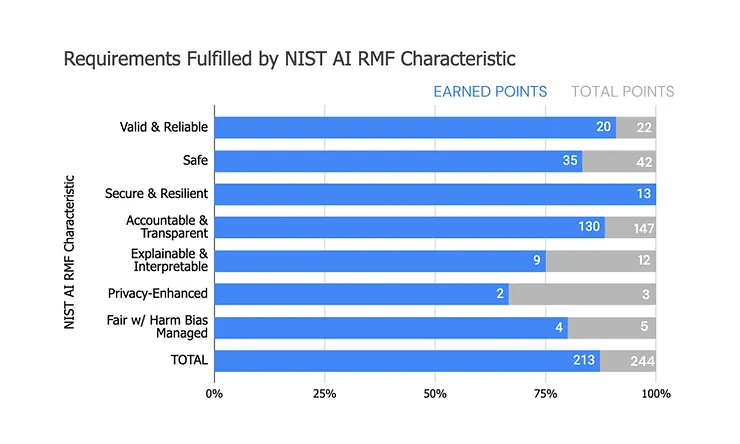

Figure 1: Sample output of fulfilled requirements by NIST AI RMF Characteristic

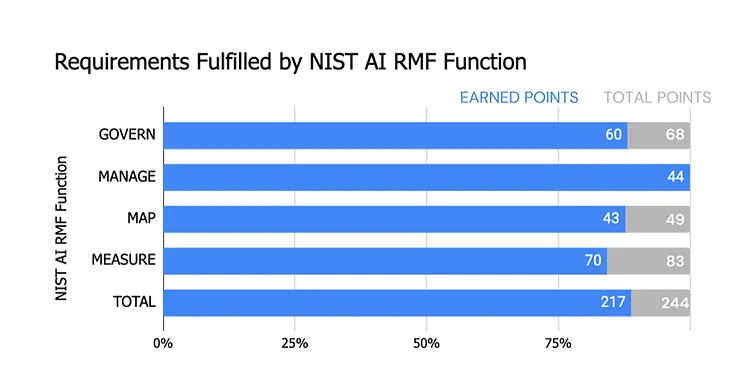

Figure 2: Sample output of fulfilled requirements by NIST AI RMF Function

How can organizations use RAISE Corporate AI Policy Benchmark to improve their AI policies?

Beyond the scoring overview, the RAISE Corporate AI Policy Benchmark will also identify the requirements that were missed, showing users where their corporate policy has gaps. The Benchmark will also include a mechanism that enables members to provide feedback when they believe its output is inaccurate.
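Identifying missed requirements is the complement of the scoring step: any requirement not marked Y becomes a gap. A minimal sketch, assuming the same Y/N answers used for scoring; the record fields, IDs, placeholder requirement text, and report format are all hypothetical and do not describe the Benchmark’s actual output.

```python
from collections import defaultdict
from typing import Dict, List, Mapping, NamedTuple, Sequence


class Requirement(NamedTuple):
    req_id: str          # hypothetical identifier
    text: str            # placeholder; real requirement wording comes from the Policy
    characteristic: str  # NIST AI RMF characteristic
    function: str        # NIST AI RMF function


def find_gaps(
    requirements: Sequence[Requirement], answers: Mapping[str, str]
) -> Dict[str, List[Requirement]]:
    """Group every requirement not answered "Y" by its characteristic."""
    gaps: Dict[str, List[Requirement]] = defaultdict(list)
    for req in requirements:
        if answers.get(req.req_id, "N") != "Y":
            gaps[req.characteristic].append(req)
    return dict(gaps)


def gap_report(gaps: Mapping[str, List[Requirement]]) -> str:
    """Render the gaps as plain text, one header line per characteristic."""
    lines: List[str] = []
    for characteristic, missed in gaps.items():
        lines.append(f"{characteristic}: {len(missed)} missed")
        lines.extend(f"  - {r.req_id} ({r.function}): {r.text}" for r in missed)
    return "\n".join(lines)


requirements = [
    Requirement("R-001", "Requirement text A", "safe", "Govern"),
    Requirement("R-002", "Requirement text B", "safe", "Map"),
    Requirement("R-003", "Requirement text C", "privacy-enhanced", "Manage"),
]
print(gap_report(find_gaps(requirements, {"R-001": "Y"})))
```

Listing each gap with its function as well as its characteristic lets a reader see a missed requirement from both of the angles the Benchmark scores.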

About The RAI Institute

The Responsible AI Institute (RAI Institute) is a global and member-driven non-profit dedicated to enabling successful responsible AI efforts in organizations. The RAI Institute’s conformity assessments and certifications for AI systems support practitioners as they navigate the complex landscape of AI products.

Media Contact

For all media inquiries, please contact Nicole McCaffrey, Head of Marketing & Engagement, at nicole@responsible.ai or +1 440.785.3588.
