When Robert Williams comes home one January night, he finds himself and his family swimming in the glow of red and blue lights from three police cars in their driveway. The police show him a piece of paper with the words “arrest warrant” and “felony larceny” on it and tell him he’s a suspect in a crime. Robert Williams is then handcuffed in front of his family, taken to the police station, and questioned about a case involving stolen watches. He eventually learns that the police had used a facial recognition tool, and that the tool had misidentified him as the Black man who actually committed the crime.

Facial recognition has been in the news for many similar incidents, and many municipalities have already enacted, or are considering, some form of ban on the technology. The ACLU has also recently called for a national ban on facial recognition tools for law enforcement. To be clear, facial recognition and other AI tools cannot be allowed to continue unchecked. They must be regulated. But while a universal ban may seem like the best way to do that, it’s likely not the best solution in the long term.

What happened to Robert Williams shouldn’t have happened. But we can use the regulation of AI tools as a way to get at the larger, systemic biases that these tools not only uphold but perpetuate, something a universal ban on the tool alone wouldn’t fix.

We can make them work better.

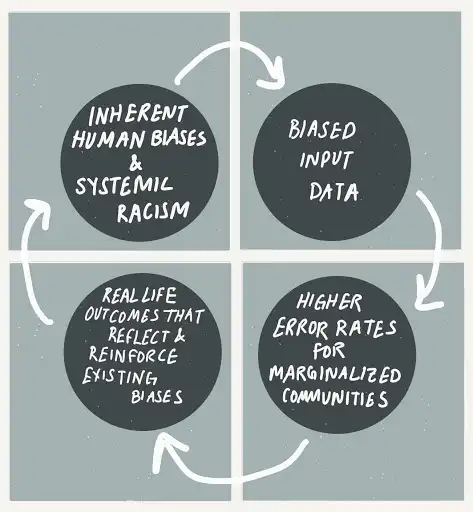

Part of the problem with facial recognition technology, as Robert Williams’ story shows, is that these technologies are deeply biased, with disproportionately high error rates for people of color, women, and young people. Part of this comes from the datasets these algorithms are trained on, part from researchers not accounting for that bias in their algorithms, and part from the fact that humans themselves are far less accurate at recognizing faces from racial or ethnic groups other than their own.

We like to think that technology is the great equalizer: that algorithms aren’t biased, only humans are. But we’ve seen, time and time again, that these AI tools perpetuate the same biases and prejudices that we as humans hold. Before we can make any claims about the generalizability or usefulness of these technologies, we first need to address the bias built into them. If these technologies were better at correctly identifying Black faces, we might not have Robert Williams’ story to talk about today.

When we’re thinking about laws and regulations around AI and facial recognition, we can use them to make these tools work better.

We can use them to combat root causes.

Robert Williams’ story highlights another key point. As we’ve said, we need regulations on the use of facial recognition, but we also need to talk about the root causes behind these systemic issues. Robert Williams’ story would be very different if the system and structure of policing in this country looked different. As he writes in his own account: “As any other person would be, I was angry that this was happening to me. As any other black man would be, I had to consider what could happen if I asked too many questions or displayed my anger openly — even though I knew I had done nothing wrong.”

Systemic racism is part of every system in our society, and facial recognition is a tool that can be used in every one of those systems: policing, healthcare, even education. A tool is only as good as the humans in charge of it. These tools perpetuate bias because they operate within systems that already perpetuate it.

We also see this with another potential use case: facial recognition as a barrier to school entry, meant to mitigate the risk of school shootings. At first glance this can seem like a positive use case, but when we look deeper and ask the larger questions, facial recognition here is solving a problem that doesn’t need to exist, and might not exist if we had stricter gun control in this country.

When we create laws and regulations around these tools, we can use them to combat the root causes as well as the harms of the tool itself. We don’t have to treat these technologies as something new and separate, or regulate them as if they were alien things.

When we craft targeted laws that get at these larger issues, and create levels of accountability and oversight that may not currently exist, we can not only mitigate the harmful effects of the tool but also reimagine these systems to combat the root causes of systemic bias.

We can make them about the private sector as well.

Many of these bans don’t account for other forms of biometric data, like fingerprints, and have no effect on the private sector’s use of this data. We now live in a world where we are constantly being surveilled.

For example, Apple’s Face ID and Touch ID have made scanning faces and fingerprints a routine part of unlocking a phone. Facebook and Google have been collecting information about us for years for advertising, and have more recently moved into biometric tracking through features like suggested friend tagging in photos. Companies like 23andMe have even built databases of DNA and genome data.

These local bans also don’t account for the huge ways the private sector has been dismantling our sense of privacy online and off, or for biometric databases beyond facial data. The bill recently introduced in Congress does seem to ban law enforcement’s use of biometric databases more broadly, and that’s a step in the right direction, but simply opting for a permanent ban on law enforcement’s use of facial recognition doesn’t take all of these factors into consideration.

When we create these regulations, we can use them to think broadly about the ways that these tools affect our larger society, not just the public sector.

We can make them work for us.

Facial recognition can be used for many good things. It’s been used to identify missing children in India, can aid disaster relief efforts, and more. With police body cameras, we’ve seen that the footage very rarely leads to accountability for officers, and more often than not leads to harsher prosecution of their victims. This points to the larger systemic issue of accountability in policing, but it doesn’t mean we should ban body cameras. It means we need to look more deeply and broadly at the structural issues and create good rules, rules that are also enforced.

Technology is a hugely powerful tool that can be used for so much good. We can use these new challenges and questions to rethink and reimagine how our society is structured, rather than simply banning these tools because they perpetuate existing biases.

How do we do this?

When we think about lowering error rates for marginalized folks in facial recognition software, we also need to think about whose faces are used to train these tools and where that data comes from. For example, some tools use only mugshots or driver’s licenses to build their training databases. (But again, we need to consider how larger systemic issues shape that data: because Black and brown people are overpoliced, training on mugshots would skew the algorithm in the same direction.) There’s also the question of privacy: are the people whose faces are being used consenting to it? Are they minors?

There are also external factors to consider when the tool is in use. When it’s used by law enforcement, are there restrictions on which levels of law enforcement can use it? Is there a reason to prevent sharing between different agencies? Is the tool being used in real time, as in an airport, or only after the fact, as in the case of Robert Williams?

What level of accountability is there for when cases like his happen? Who is responsible? What toxic feedback loops are being perpetuated?

AI Global is working on all of these issues, and our Responsible AI Design Assistant walks you through these questions for your own tool. You can also find more examples of rules and regulations in our upcoming Playbooks. Stay tuned!

These issues affect real people every single day – Robert Williams isn’t the first one, and he won’t be the last one. AI, unchecked, can scale up the biases we already hold in ways that are currently unimaginable. We know this.

What it really comes down to is that putting specific regulations on facial recognition gives us the chance to reimagine the government’s control over our private information, and to reimagine the trust and power we give law enforcement over our lives in exchange for safety. It allows us to put in place a structure of accountability and oversight that doesn’t exist right now. An overall ban misses those opportunities, and doesn’t even challenge the current biases or the ways they’re being perpetuated. And as always, all of these regulations can and must go hand in hand with larger systemic reforms.

In the meantime, we can keep working on the small, incremental changes that lead up to those larger systemic changes, making sure we think holistically about how all of the pieces fit together. Human biases feed into algorithmic biases, but beyond that, we need to think about how these algorithms fit into our larger society. What data and privacy protections does this tool have? What accountability systems are in place for when things go wrong? We must consider all of these things together, because society is built on all of these factors. We can work to shape the society we want through the tools we create and use.

Sources:

- https://www.sentencingproject.org/publications/color-of-justice-racial-and-ethnic-disparity-in-state-prisons/

- https://www.prisonpolicy.org/blog/2019/10/09/pretrial_race/

- https://www.prisonpolicy.org/blog/2018/10/12/policing/

- https://www.sentencingproject.org/publications/un-report-on-racial-disparities/

- https://www.propublica.org/article/how-we-analyzed-the-compas-recidivism-algorithm

- https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing