As organizations race to get their start in AI, many find themselves stumbling at the starting gate, unclear on what steps to pursue. AltaML provides organizations with much-needed direction by prioritizing productivity or process challenges that can be solved with applied AI, and by working as an invested partner, along with partner domain experts, to develop and deploy AI solutions and products. Its north star is to apply AI to elevate human potential. We recently had a conversation with AltaML, one of our first corporate members, about the joint effort to create a responsible AI governance structure. Co-CEO Nicole Janssen was among those represented.

RAII: How do you acquire clients and apply your technology solutions to their business models?

We work with clients that have problems within their organization that can be solved with AI and machine learning (ML), and then collaborate with them to figure out the main approach or structure to help them build out the proper tools. RAI Institute has helped us with the responsible side of our AI and ML programs, which, in our mind, is non-negotiable.

To build on that, AltaML operates as a corporate lab for companies that already have data and analytics capabilities, but need additional expertise and innovation that is hard to achieve internally. They are also looking for guidance as to how AI should be developed responsibly within their business.

RAII: What is driving the strategy of a Responsible AI (RAI) Governance Policy at AltaML?

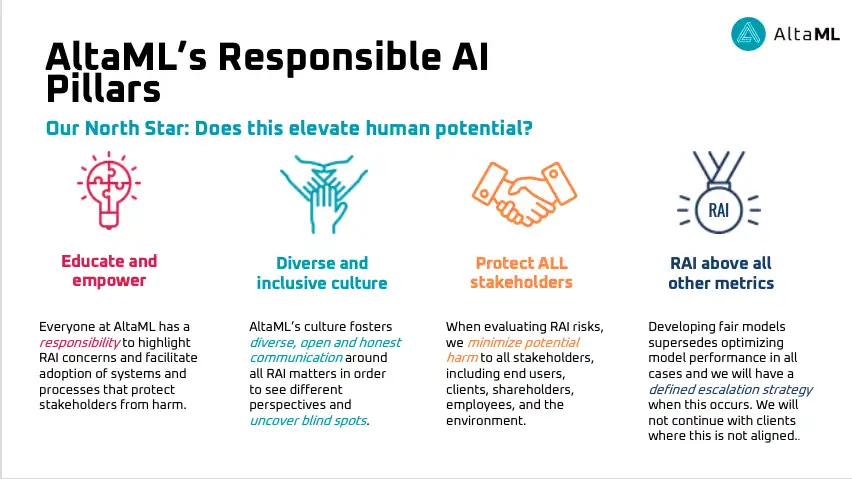

“Our purpose is to apply AI to elevate human potential, and to execute on that, we need an RAI governance policy. This is a work in progress that continues to be informed by our engagement with the RAI Institute. We have developed pillars for RAI governance, which are to educate and empower on RAI concerns, to have a diverse and inclusive culture to see different perspectives and uncover blindspots, to protect ALL stakeholders, and to hold RAI above all other metrics.”

-Nicole Janssen, Co-CEO, AltaML

Our massive goal is to impact one billion decisions by 2030, and when you talk about impacting decisions at that scale, we recognize that we have an ethical obligation as a firm to operate responsibly. For us, this means checking our data for biases, ensuring it doesn’t reflect existing ones, and elevating RAI above all other metrics. Our partnership with RAI Institute brings both the use of RAI Institute’s expert tools and the credibility of having a third-party, independent review board to help us develop AI responsibly. We’ve leveraged RAI Institute’s knowledge to develop our pillars of responsible AI, which align with what we learn from our conversations with the RAI Institute and other partners.

RAII: What impact has RAI Institute had on your ongoing governance of responsible AI?

AltaML has worked alongside the RAI Institute from the first iteration of the Responsible AI Design Assistant to now, running the tool through various real-life use cases and projects and extrapolating real-time strategies. The Design Assistant, education, and other RAI Institute tools have helped guide the AltaML practice thanks to the diverse perspectives used to create the tools. Our technical team has done a huge amount of work diving deep into bias, unfairness, and mitigation strategies, but RAII has added another level of credibility and rigor to our practice.

“We want AltaML to be a recognized leader in responsible AI. That will take continuous improvement. This is very much an emerging field, and far from solved. We see ourselves not only implementing best practices internally but also taking on an advocacy role. Partnerships are key to success here, as different partners bring their different perspectives to the conversation. In working with the RAI Institute, which is impartial, we are advancing our own thinking around ethics and governance.”

-Nicole Janssen, Co-CEO, AltaML

RAII: You’ve really helped RAI Institute along the way as well, particularly in the refinement of our design assistant for real-world case studies.

We’ve helped RAII by offering insights from the industry side and by serving as an example of a company that provides services to other companies. We’ve also been included in sessions around designing the RAII Certification for more than just one type of system. In terms of crafting an overall governance structure for responsible AI in practice, the Design Assistant has given us a roadmap to success, with several questions to consider in the creation and implementation of systems in all of their scoring areas.

We bring a commercial, boots-on-the-ground perspective. We are really about the applied side: how to make things work in practice. There’s a lot to think through in terms of integrating RAI principles into business processes, so in working with the RAI Institute, both parties come away from discussions with greater insight, having considered issues from multiple perspectives, from the more abstract to the more concrete practicalities.

RAII: Can you speak to some lessons learned along the way?

“I think we will always be learning lessons; this is a field where you can never be complacent and sit back. Anyone working in AI that is comfortable is just not doing it right–you need to always be considering implications, examining the potential for unintended harms. Our approach at AltaML includes steps to identify, explain and mitigate concerns. For example, what are the hidden biases that can affect data collection, interpretation and model building? How can explainable AI (XAI) be used to provide clarity around which particular model features are driving model outputs? What steps can be taken to mitigate concerns?”

-Nicole Janssen, Co-CEO, AltaML

To build off that, the biggest lesson is more of a takeaway: this field is always evolving and moving. Responsible AI governance is never a one-and-done check mark; it is a growing, changing process of building partnerships and sharing knowledge. You consistently have to educate your clients and yourself to ensure that you have the right practices in place, and that’s what RAI Institute brings: an objective, third-party lens on which RAI factors are important and on the latest trends or pieces of legislation to consider.

From this, we’ve made sure that responsibility is cross-functional, and not just an area for one team or department. Responsibility stretches from top to bottom across our organization, and since responsibility looks different to everyone, we communicate our diverse viewpoints and perspectives and work them into our governance structure.

RAII: Can you elaborate on that cross-functional team? Is the RAI Institute a player in that?

Yes, we absolutely see the RAI Institute as a player on our cross-functional team. We’ve never been of the mind that we have a complete skill set or knowledge base in-house; we look to partnerships to complement and broaden our expertise. We take this partnership approach to the applied AI that we do in industry, where we have some subject matter expertise in a given domain, but the deep subject matter expertise resides with the partner, and this is fundamental to the way that we collaborate. We take the same approach with responsible AI by engaging with organizations that have deep subject matter expertise in RAI, in particular the RAI Institute, and in so doing, we are building our own knowledge base.

“To add on to that point, collaboration is so essential that success in RAI will come from industry, academia, government and groups like the RAI Institute working together, bringing their various lenses and learnings to a greater shared understanding. Silos won’t be effective.”

-Nicole Janssen, Co-CEO, AltaML

If we determine there are risks that are insurmountable from a responsible AI perspective, we will not continue with the use case, and we are fully prepared to walk away from projects and clients where those values aren’t aligned. However, we have found that in practice, this isn’t always black and white at the outset; there is a lot of nuance and gray area surrounding right and wrong, as it takes time for issues to come to light. Creating this cross-functional RAI team internally inspires a culture where everyone feels responsible for the ethical implications of the work that we do, and is willing to speak up when they see ethical concerns.

Our partnership with the RAI Institute also contributes to that cross-functional team and serves as a differentiator, both when engaging with potential members and when bringing on new projects. It shows that we are willing to invest significant time and resources into adding layers of rigor and credibility to our practices.

At the end of the day, our team at AltaML feels responsible for the impacts of our work and strives to protect all stakeholders from potential unintended impacts of the AI solutions we build. Our AI governance model is something that our team takes pride in and that we’ll continue to build, evolve, and grow upward.