By Ashley Casovan and Var Shankar

Generative AI has sparked inspiration, curiosity and a public debate like no other AI technology has to date. While the potential people have seen in tools like ChatGPT and DALL-E has allowed us to move beyond comparing AI to the “Terminator,” the opportunities and potential ubiquity of these systems have prompted an important debate about how they should be developed and allowed to function in society.

It also marks the first time that most of us working in AI have easily been able to explain to our parents what we do. “Yes, mom, it’s crazy that someone would think the Pope would wear Balenciaga.”

We have taken our time to weigh in on this debate to better understand how Generative AI can actually be used, not just prospectively, but in real applications. We have also paid close attention to the conversation surrounding Generative AI systems. An important element of this conversation has been an open letter published by the Future of Life Institute, which has gathered over 7,000 signatories and attracted significant media attention. The letter’s drafters believe that the research and development of Generative AI models should be paused. In this article, we want to break down our views of the opportunities and the implications of Generative AI.

Why is Generative AI different?

Generative AI models, which include large language models (LLMs) and text-to-image systems, have made it possible for people around the world, regardless of programming ability, to interact with cutting-edge AI systems for free. Capabilities based on this technology are being rolled out within productivity software by Google, Microsoft, Salesforce, and others, making them broadly available. Recent iterations, like OpenAI’s GPT-4, have also shown significant gains in translation across languages. The possibilities seem nearly limitless, from improved business productivity, to faster and better research and development, to improved compliance, to better services that improve people’s lives.

For a more complete rundown, we suggest reviewing an easy-to-understand explainer from BCG on Generative AI models, the data used to power them, and some of their applications.

Though Generative AI systems like GPT-4 only became household names recently, serious efforts are underway to track and study them. For example, Stanford has created an interdisciplinary Center for Research on Foundation Models and the UK Government has set up a foundation models task force.

What are the implications of these systems?

While we are excited about Generative AI’s potential, we are also clear-eyed about its challenges.

Many risks of Generative AI are already well known. These systems can ‘hallucinate’ by making up facts. Their outputs can be biased. They were trained on the internet, raising significant intellectual property and privacy issues. Their environmental impacts are significant. While most of the world speaks languages other than English, LLMs are largely focused on the English language and reflect the social mores encoded in English-speaking societies.

Even when they don’t work well, they can inspire unwarranted trust among users. For example, a group of Stanford researchers recently found that participants with access to an AI assistant “wrote significantly less secure code” but “were more likely to believe they wrote secure code” than those without access. Moreover, the pace at which their capabilities are increasing could result in major societal impacts soon: job losses, domination of the internet by machine-generated content, and the ability of small groups to influence democracy itself by generating effective rhetoric, images, and videos to sway lawmakers and the public.

While Generative AI promises to democratize access to services and resources, only a few well-resourced organizations are at the cutting edge of developing these systems. As a result, important insights into how these tools are being used and adopted can be gleaned only by a handful of already-powerful tech companies. The CEO of OpenAI, Sam Altman, has acknowledged that “in a well functioning society, I think this would have been a government project.”

Should there be a Generative AI pause?

Our organization’s focus is on being aware of issues that can arise from all types of AI systems and on understanding what strong and effective mitigation measures might look like. Given this background, we don’t believe that a pause in the research and development of Generative AI systems is possible, for a few reasons.

- Even if paused, research and development of Generative AI would likely proceed in secret. We believe that it is better to do this important work as openly and transparently as possible.

- The effectiveness of a pause is dependent on strong government oversight for AI, which is in its infancy. Absent strong government oversight, some parties – including bad actors – could advance Generative AI research even if others choose to pause.

- There are many beneficial research areas that use cutting-edge Generative AI – like predicting protein shapes and suggesting new drugs. Pausing these kinds of research would be a lost opportunity.

However, we do agree with our colleagues at the Future of Life Institute that researchers and independent experts should “jointly develop and implement a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts” and “ensure AI developers work with policymakers to dramatically accelerate development of robust AI governance systems” including by developing a “robust auditing and certification ecosystem.”

How will RAI Institute approach Generative AI?

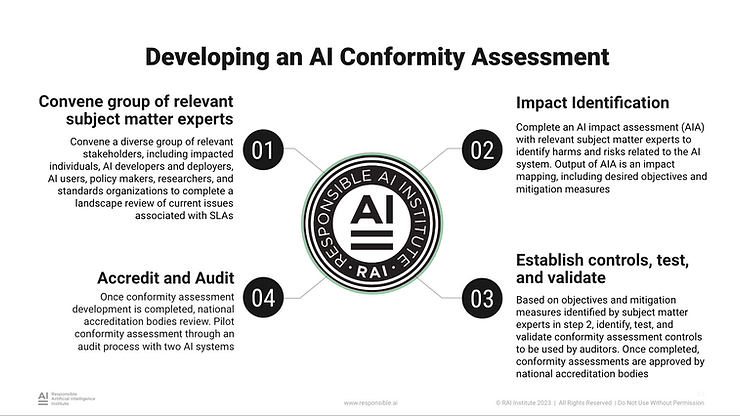

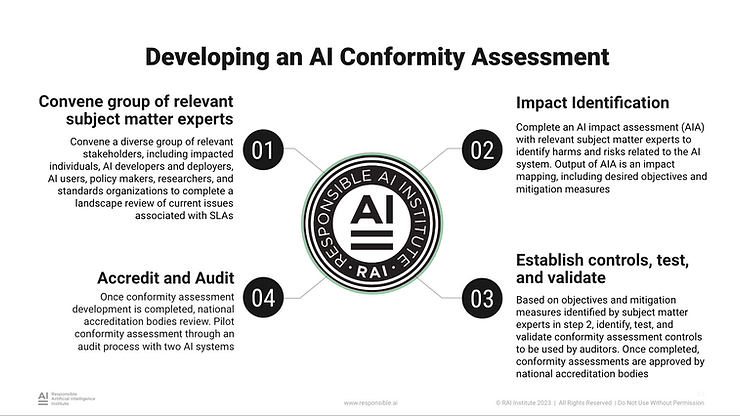

These calls align closely with how the Responsible AI Institute is approaching Generative AI issues. Our approach includes advocacy for improved AI policies and government audit efforts, as well as the development of a comprehensive conformity assessment scheme for AI systems. The scheme is informed by independent experts and can be used by organizations to improve internal development processes or by independent auditors.

We continue to believe that it is important to urgently regulate the development of AI systems, including Generative AI systems, and to do so at the use-case level. The UK’s proposal to ban the use of non-consensual intimate images to generate ‘deepfakes’ is a good start. Lawmakers should spell out similarly robust measures for other harmful use cases of Generative AI systems.

As EU lawmakers consider regulating powerful and broadly applicable Generative AI systems as ‘General Purpose AI systems’ within the EU AI Act, we suggest considering both societal and context-specific issues.

Existing laws and enforcement powers already provide important safeguards on the use of AI systems. For example, in the US, the FTC Act prohibits deceptive or unfair conduct, and the FTC clarified in a recent blog post that it intends to apply this standard to Generative AI and synthetic media. European regulators are asking tough questions about privacy protection during the training and operation of Generative AI models.

Regulation should be bolstered by the development of guidelines, standards, and certification programs for the responsible use of AI systems, including those powered by Generative AI.

The accompanying image shows the approach that we have taken to developing guardrails for AI systems. It recognizes that how these AI systems are being used, what technologies they are built from, and where they are being deployed are all important factors in ensuring effective oversight.

To apply this approach in the Generative AI context, we are evaluating the impact of Generative AI within our current sectors of focus, including health care, financial services, employment, and procurement, and integrating the results into our assessments. We also have two beta Generative AI consortiums under development, one focused on health care and the other on synthetic media. Stay tuned for forthcoming information about them.

Understanding and responsibly harnessing the power of Generative AI systems will take a concerted effort among policymakers, industry, academia, and civil society groups. Time is of the essence.